After raising 500 million yuan, a post-90s Tsinghua University doctoral supervisor builds robots: "The outside world misunderstands us in many ways."

Text by | Qiu Xiaofen, Su Jianxun

Edited by | Su Jianxun

"There is indeed a gap between how the outside world sees us and what our business actually is," founder Chen Jianyu told "Intelligent Emergence" in the Beijing office of "Star Era".

"Star Era" was founded in August 2023 by Chen Jianyu, an assistant professor at the Institute for Interdisciplinary Information Sciences of Tsinghua University. On July 7, 2025, "Star Era" announced the completion of a Series A financing round of nearly 500 million yuan, jointly led by CDH CGV Capital and Haier Capital, with participation from Houxue Capital, Huaying Capital, Xianghe Capital, Fengli Intelligent, and others. Existing shareholders such as Qingliu Capital and Tsinghua Holdings Fund also increased their investments.

Although it was founded only two years ago, "Star Era" has already released a series of robot hardware products, including dexterous hands, wheeled robots, and full-size humanoid robots. As a result, many people mistakenly regard Star Era as a robot body company, and some even "think we are a dexterous hand company".

This is not the label Chen Jianyu wants attached to the company.

Building a general-purpose, intelligent robot is the goal Chen Jianyu set nearly a decade ago, when he saw AlphaGo. It means that a robot needs not only a body but also a "brain" to handle different scenarios.

"Doing both the 'brain' and the body at the same time may seem very difficult, but for me, since I can do both, it is a natural choice," Chen Jianyu told "Intelligent Emergence".

Among founders of embodied intelligence companies, Chen Jianyu has a rare cross-field research background. His past academic work has covered both the "body" and the "brain".

In 2011, Chen Jianyu was admitted by recommendation to the Department of Precision Instrumentation of Tsinghua University, one of the earliest domestic institutions to research bipedal humanoid robots. During his doctoral studies at the University of California, Berkeley, he began to study MPC (Model Predictive Control) and end-to-end reinforcement learning, both of which are important technical routes for today's embodied intelligence "brain".

In fact, Chen Jianyu's research achievements in robot algorithms are even more notable than his hardware work. He proposed DWL, a new-generation learning algorithm framework for humanoid robots, which was nominated for the Best Paper Award at RSS, a top-tier robotics conference known for its selectivity. His original embodied large-model algorithm VPP, which integrates generative world models, was selected as a Spotlight paper at ICML, a top-tier artificial intelligence conference.

During a three-hour interview with "Intelligent Emergence", Chen Jianyu spent half of the time discussing algorithms and the "brain" with us.

However, Chen Jianyu does not believe that algorithms alone, or a body alone, can reach the goal of a "general-purpose humanoid robot". What he wants is a "system" built on two general-purpose architectures, one for hardware and one for software:

At the software level, "Star Era" released the VLA model ERA-42, which integrates understanding and generative capabilities. This robot "brain" incorporates a world model, so it can understand the world in depth and make real-time predictions.

At the hardware level, "Star Era" is developing general-purpose, modular robot products. Like Lego, the robots can change form to suit different scenarios, including bipedal, wheeled, and humanoid forms.

In addition, because the current robot supply chain is still immature, "Star Era" develops the smallest units of the robot body in-house, such as joint modules, control units, motors, and reducers.

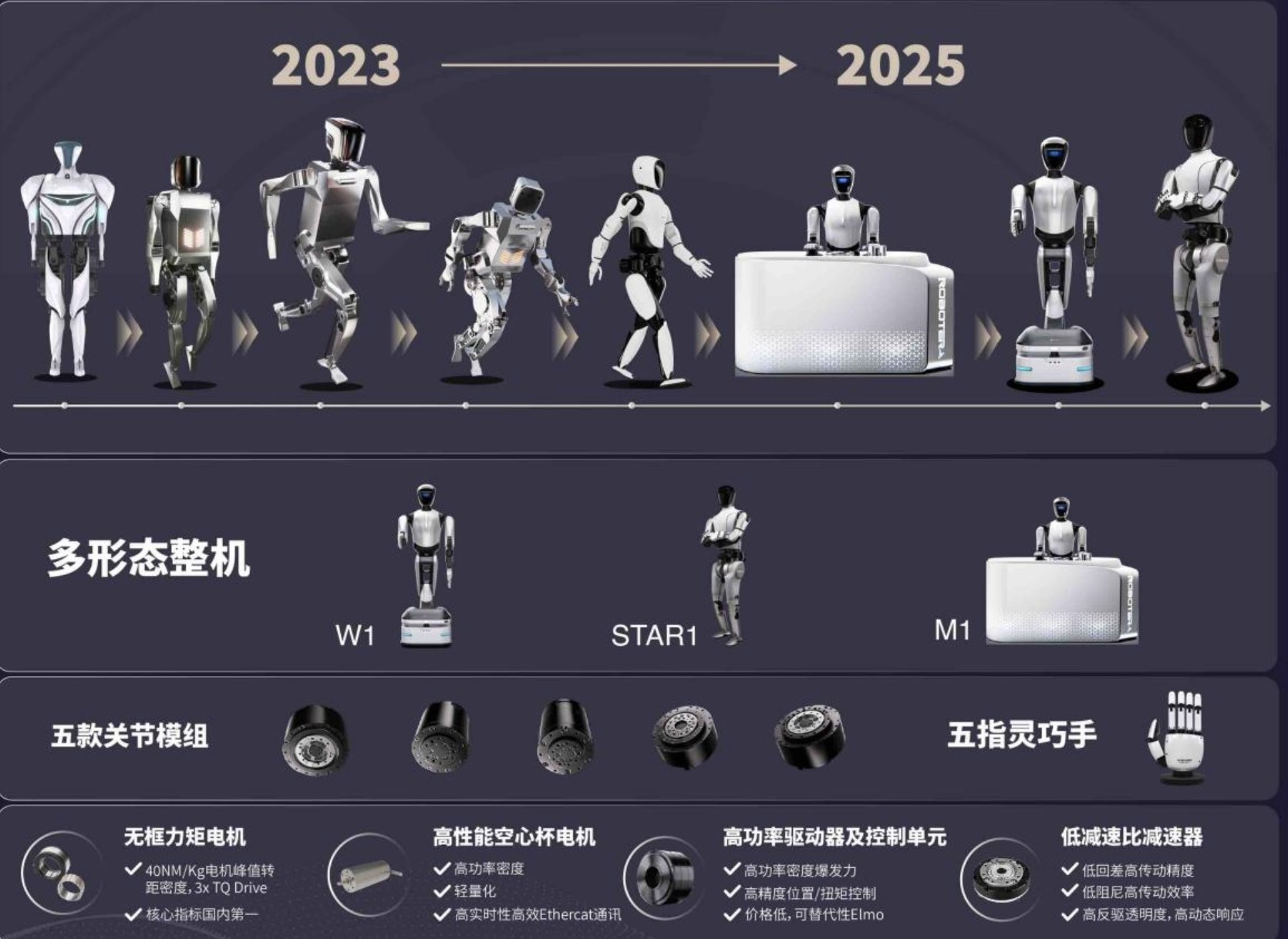

Once the software and hardware architectures mature, the company can expand more nimbly in both the functions a robot performs and the form it takes. This also explains why "Star Era" has rolled out robot bodies so quickly: its humanoid robot business has already launched three mature products for customers, namely the five-finger dexterous hand XHand 1, the wheeled service humanoid robot Q5, and the full-size humanoid robot STAR 1.

Star Era's product line. Image source: provided by the company

On commercialization strategy, Chen Jianyu likes to talk about one concept: "laying eggs along the way". For example, he believes that once the robot dexterous hand is developed, it can be sold right away instead of waiting for the whole machine. This not only helps bring down hardware costs step by step but also yields data that forms a data flywheel and feeds back into R&D.

According to Chen Jianyu, nine of the world's ten largest technology companies by market value are currently customers. As of June, "Star Era" had delivered more than 200 products in 2025, with hundreds more orders in mass production and delivery. Its customer list includes Haier Smart Home, Lenovo, Beizhi Technology, and others.

Recently, "Intelligent Emergence" had a long conversation with Chen Jianyu. He shared his thinking on algorithms, body products, and commercialization in the robot field. The academic studies by Chen Jianyu's team that came up in the conversation are appended at the end of the article. The following is the edited interview transcript:

Build the body or the brain? "This has never been a question."

Intelligent Emergence: Judging from your previous academic work at Tsinghua and Berkeley, you have research experience in both the "body" and the "brain". This is relatively rare among founders in the embodied intelligence circle. When choosing your entrepreneurial direction, did you consider focusing only on the body or only on the brain? Was this choice ever a dilemma for you?

Chen Jianyu: This has never been a problem for me. It is mainly based on two judgments:

First, is it necessary to work on both the body and the brain? The answer has been clear from the beginning. With only a body and no brain, the robot is just scrap iron. With only a brain and no body, it is not a robot. To close the commercial loop in the end, we must deliver customers a product that integrates hardware and software.

Second, do we have the ability to do both? Doing both the brain and the body at the same time may seem very difficult, but for me, since I can do both, it is a natural choice.

Looking at my personal experience over the past decade, I first worked on robot hardware and electromechanical systems. During my doctoral studies, I worked on hardware-software integration and various control tasks. Later, I moved into AI. I have been involved in robot AI for nearly a decade, having started research in this area around 2016 and 2017, around the time of AlphaGo.

Intelligent Emergence: How did the emergence of large AI models in 2022 affect your subsequent work direction?

Chen Jianyu: We have gone through several stages:

The first stage was combining language models with existing robotics work. After ChatGPT was launched in 2023, I tried using language prompts to have ChatGPT play the role of a robot and do task planning, such as deciding how the robot should use its sensors to identify the target first and then take action. Even then, it did a relatively good job. Based on this, we completed a paper, which was the world's first research combining language models and humanoid robots.

In 2023, we completed the world's first work combining large language models and robots, improving the alignment between the upper-layer language model planning and the lower-layer reinforcement learning policy.

The second stage was inspired by Google. Around 2023, we started research on a prototype end-to-end VLA (Vision-Language-Action) model and became one of the earliest domestic teams to reproduce RT-2. Later, we found some problems in actual operation and proposed improvements, which became the well-known fast-slow system VLA framework.

In September 2024, we first proposed a VLA solution that adds a high-frequency action processing module on top of a VLM. After this architecture was published, leading institutions in the industry successively released VLA models with similar architectures, including Pi0 from Physical Intelligence (October 2024), Helix from Figure AI (February 2025), and Groot N1 from NVIDIA.

RT-2 is essentially a slow system that focuses on thinking. Although it can handle language, it lacks effective action processing. We added a fast system that outputs finer-grained actions and runs at a higher frequency.
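
For readers who want a concrete picture of the fast-slow split described above, the toy Python sketch below may help. It is not Star Era's implementation: `slow_system`, `fast_system`, `run`, the one-dimensional position, the gain, and the replanning interval are all hypothetical stand-ins, chosen only to show a slow planner that is called occasionally and a fast controller that acts on every tick.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Plan:
    """Output of the slow system; here just a 1-D target position (an assumption)."""
    target: float


def slow_system(instruction: str, observation: float) -> Plan:
    """Stand-in for the slow, language-aware planner.

    Hypothetical rule: an instruction containing "raise" plans one unit
    above the current position; anything else plans one unit below.
    """
    offset = 1.0 if "raise" in instruction else -1.0
    return Plan(target=observation + offset)


def fast_system(plan: Plan, observation: float, gain: float = 0.5) -> float:
    """Stand-in for the high-frequency action module: one proportional step."""
    return gain * (plan.target - observation)


def run(instruction: str, start: float, ticks: int,
        slow_every: int) -> Tuple[List[float], int]:
    """Act on every tick, but replan only every `slow_every` ticks."""
    position = start
    plan = Plan(target=start)
    slow_calls = 0
    trajectory: List[float] = []
    for t in range(ticks):
        if t % slow_every == 0:
            # Slow path: refresh the plan at a low frequency.
            plan = slow_system(instruction, position)
            slow_calls += 1
        # Fast path: runs on every tick using the most recent plan.
        position += fast_system(plan, position)
        trajectory.append(position)
    return trajectory, slow_calls
```

In this sketch, calling `run("raise the arm", 0.0, ticks=10, slow_every=5)` invokes the planner twice while the controller produces ten actions, which is the frequency gap the fast-slow design relies on.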

The third stage was inspired by the emergence of the Sora video-generation model. Previously, to capture the physical world, for example in NVIDIA's simulator, one had to write physical laws by hand, which was a complex process and hard to make accurate. Sora can generate detailed videos of people walking, hands grasping objects, water being poured, and so on. Robots need such a general-purpose world model.

Therefore, we considered introducing it into robots. Subsequently, we proposed a series of VLA algorithm frameworks that integrate generative models.

In September 2024, we released the PAD architecture, first proposing the integration of a world model, which was accepted by NeurIPS.

In December 2024, we released the VPP architecture, first proposing a pre-trained video prediction model and integrating it with the PAD architecture, which was accepted by ICML.

In September 2024, we proposed the iRe-VLA framework, first proving that reinforcement learning can be used to train end-to-end embodied large models and improve their performance.

In January 2025, we proposed UP-VLA, an embodied model that unifies understanding, prediction, and policy learning and predicts future frames and low-level actions at the same time; it was accepted by ICML.

Our model can not only predict the future but also directly control the robot's specific actions end-to-end, down to the fine adjustment of each joint.

For example, when the robot places a cup near an edge, it anticipates that the cup might fall and keeps making predictions, which helps it prepare in advance. We have iterated through several generations of large models and proposed the world's first VLA model that integrates generative world models.

As far as we know, several top foreign teams are also working on this. Last month, Meta used a similar method to integrate a world model.

Intelligent Emergence: It seems that you have made sufficient preparations in terms of models and algorithms. What is still lacking now?

Chen Jianyu: First of all, it is data.

When language models were first developed, there was already a large amount of human language data on the Internet. You only needed to scrape the data and do some processing. But for robots, there is naturally not so much data.

Waymo recently added their driving data in San Francisco. Although it is a large volume, it is far from the data volume of language models. If we collect data in this way, it may take tens of thousands of years to reach the training data volume of ChatGPT.

The flywheel effect is harder to achieve for robots than for autonomous driving, because many cars are already on the road while few robots are deployed.

Intelligent Emergence: Many companies are currently using real-robot teleoperation to generate data. Will you consider using this method?

Chen Jianyu: We use a combined approach. First, we pre-train on a very large amount of video data to obtain a relatively general-purpose base model. Then, we use more refined teleoperation data to fine-tune it toward the target task. This reduces the demand for real-robot teleoperation data, rather than relying on it directly for the underlying pre-training.

Intelligent Emergence: For the robot "brain", what kind of data is really useful for the model?

Chen Jianyu: We need diverse data. Take driving as an example. If all the data is about perfect driving, the model may not be able to handle slightly dangerous situations. Therefore, it must cover a variety of different scenarios.

For example, when learning from videos of pouring water, we cannot always use the same cup in the same position. Different postures and the shape of the water cup will affect the liquid level. So, we may need more dimensions to increase the diversity.

In addition, not every situation is a white-wall laboratory scene. We also need to try different backgrounds. Data is most effective when it covers many types and includes a large amount for each type.

Intelligent Emergence: Is it important for a robot to be human-like?

Chen Jianyu: The humanoid form is important. By training humanoid robots, you obtain a powerful foundation, which you can then scale down and adapt to other forms.

Although robots may take different forms in the future, a large share of the components is shared, including large models and joint modules, which differ only in size. The hardware technology is one unified set, and so is the software technology. Whether it is a robot hand, a bipedal form, or a wheeled form, the different shapes all use the same combined hardware-software base.

This is also the reason why we are developing humanoid robots. The humanoid form is not our ultimate goal but an important means. By combining with human behavior data, we can make better use of this data. This also echoes our method because we learn directly from a large amount of human video data.

Intelligent Emergence: A founder of a robot company once believed that the brain is not important, and the body is important. As long as there is a body, one doesn't care about the brain. What do you think?

Chen Jianyu: The premise of training AI is to have a body first, and then to keep collecting data, training, and adjusting. So the brain will inevitably lag behind the development of the body.

As a startup company, we consider "laying eggs along the way". After the body is developed, we can sell it first. The gross profit of our dexterous hand products is very high, and the marginal cost will also be reduced when developing humanoid robots. Now, we are gradually selling the whole machines on a large scale and have made preparations for mass production. Later, our models and solutions will also be gradually commercialized.

Image source: Company official

Intelligent Emergence: Can a company that only focuses on the brain be more capable than you in the future?

Chen Jianyu: I think a company that focuses only on the brain will lack many things. Its commercialization model will be incomplete, and it may lack many ways to obtain resources, so it may not be able to go far. A good business model brings in more resources, allowing more investment in R&D and better products. There is a flywheel effect here as well. Through commercialization, data can be accumulated early, which may bring many benefits.

A company that focuses only on the brain faces a high degree of uncertainty. If it works with multiple bodies, it needs to re-establish the data pipeline for each body, which takes a lot of effort and is difficult to scale up.

The popular VLA route: The "L" part is too heavy

Intelligent Emergence: The VLA route is the combination of large models and embodied intelligence, which has attracted the attention of scholars in the robot and