ByteDance and Kuaishou: Another Encounter in the AI Video Arena

Text by | Zhou Xinyu

Edited by | Su Jianxun

In mid-April 2025, Kuaishou and ByteDance, two old rivals, met again in the field of AI video generation.

First, on April 14, ByteDance's video generation foundation model, Seaweed, quietly launched its official website and released a technical report.

△ Videos generated by Seaweed. Source: Seaweed official website

"Maximum results with minimal effort" is what ByteDance is aiming for in video this time. Its first released model, Seaweed-7B, uses only 7 billion parameters yet outperforms comparable models with 14 billion parameters. It is also highly efficient to train: similar models typically require millions of GPU hours, whereas Seaweed-7B used only 665,000 H100 GPU hours.

Training efficiency of Seaweed-7B.

In contrast to ByteDance's low-key approach, Kuaishou wants to make a much bigger splash in video generation.

On April 15, at a press conference, Gai Kun, senior vice-president of Kuaishou and head of its main-site business and community science line, gave a strikingly high-profile assessment of Kuaishou's achievements in video generation in front of hundreds of attendees:

“‘Keling’ has sounded the challenge whistle for the entire video generation track.” “After us, other companies started releasing video generation models.”

Indeed, Kuaishou's video generation model “Keling”, launched on June 6, 2024, stood out among a crop of “Sora futures” (video models that had been announced but were not yet available) thanks to its generous free trial, setting a record by serving over 2.6 million users within three months of launch.

This was also the first encounter between ByteDance and Kuaishou in video. The release of “Keling” put ByteDance in the position of a chaser for a time. It was not until November 8, 2024, when its Seaweed and PixelDance models went live on the video generation platform Jimeng AI, that ByteDance returned to the first echelon in video.

Many industry insiders believe that in 2024, ByteDance, after a vigorous push to catch up, finally drew level with Kuaishou in video generation performance.

In this head-to-head contest a year later, Kuaishou is clearly unwilling to give up its position as the technology leader.

“Significantly leading globally.” “Continuously leading.” “Please allow me to repeat these two sentences again.”

As he presented the new results, Gai Kun's words heated up the room once again. He announced Kuaishou's latest work in the multimodal field: the image generation foundation model “Ketuku 2.0”, the video generation foundation model “Keling 2.0”, and the multimodal editing function MVL.

△ Videos generated by “Keling 2.0”. Source: Kuaishou

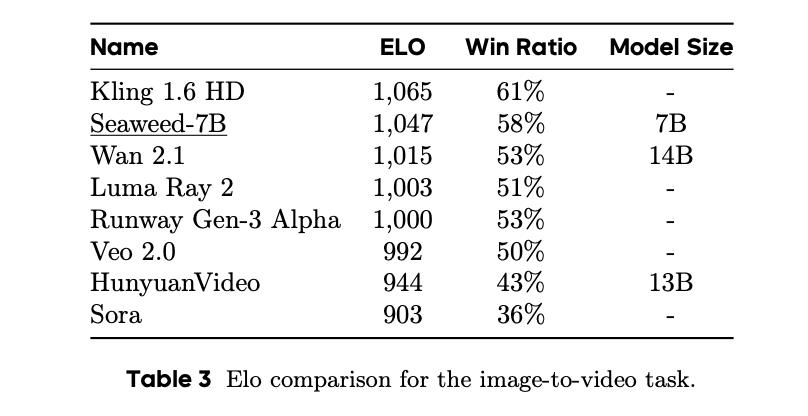

In video generation, both the text-to-video and image-to-video capabilities of “Keling 2.0” surpass those of Google's video model Veo 2. Against its old rival Sora, the text-to-video win-loss ratio of “Keling 2.0” reaches as high as 367%.

Abilities of “Keling 2.0”.

The disruption caused by DeepSeek has made the industry realize that the model sets the ceiling for AI products. In 2025, many companies are turning their focus back to the model itself.

ByteDance and Kuaishou currently have different ambitions for their AI video generation products. Reportedly, ByteDance hopes “Jimeng” will become the Douyin of the AI era, a more consumer-facing (ToC) product in the future. Kuaishou, on the other hand, pins its commercialization hopes mainly on business (B-side) customers.

Even so, in 2025 the two companies share a consensus: refine the video foundation model and secure a place in the first echelon.

According to LatePost, one of the 2025 OKRs of Zhang Nan, head of ByteDance's image and video creation platform “Jimeng”, is to focus on improving model quality. The 2025 goal of the “Keling” team is likewise summed up in Gai Kun's phrase: “Continuously leading”.

For ByteDance and Kuaishou, at least on the technical level, the fire in the field of video generation will only burn more vigorously.

Competing in performance and implementation

For ByteDance and Kuaishou, this April “encounter” is about far more than video model performance.

The difficulty of bringing video models into real-world use has long been a well-known dilemma. Besides model quality falling short of expectations, high cost is a common problem. A typical example: to cover high inference costs, the top subscription tier for OpenAI's Sora costs as much as $200 per month.

Compared with the “show-off” year of 2024, the video model arena in 2025 clearly places more emphasis on practicality and affordability.

For example, although ByteDance's Seaweed-7B slightly trails Kuaishou's previous-generation model “Keling 1.6” in performance, it is very cheap to deploy: a single GPU with just 40GB of video memory can generate high-resolution (1280×720) videos.

This means that small and medium-sized teams and individual creators can also afford AI video creation.

In terms of practicality, the consensus of ByteDance and Kuaishou is that a single video generation model currently cannot meet users' creative needs.

At the “Keling 2.0” press conference, Kuaishou also released the image generation model “Ketuku 2.0”. With enhanced instruction-following and aesthetics, this model has surpassed three mainstream models, Midjourney v7, Reve, and FLUX1.1 pro, in arena-style evaluations.

△ Images generated by “Ketuku 2.0”. Prompt: A banquet hall filled with white tables, and people sitting around are enjoying a delicious meal. Source: Kuaishou

In the view of Zhang Di, vice-president of Kuaishou Technology and head of Keling AI, image capability is an indispensable step in bringing video models into real-world use.

He cited a figure: image-to-video accounts for 85% of the videos generated by “Keling”. This high share shows that in actual video creation, most users prefer to set the style with images and add key frames to get more stable results.

According to Kuaishou staff, the multimodal editing function MVL released with “Keling” this time is likewise designed to meet creators' need for real-time video editing.

Multimodal editing MVL function.

The MVL function accepts not only text prompts but also image and video files describing the desired actions. For example, users can add characters from a new video to an existing one by uploading that new video.

While Kuaishou focuses on images, ByteDance plays to its strength in text processing. Seaweed-7B combines ByteDance's “long-context tuning technology” with its long-narrative video generation technology “VideoAuteur” to keep generated videos consistent with users' global text descriptions and shot-by-shot descriptions.

“The hope of the whole village” and “Leaving no one behind”

ByteDance and Kuaishou share the same ambition to reach the top in video, but their business situations differ.

Beyond the well-known story of “elite combat”, the birth and popularity of “Keling” involved a certain amount of chance within Kuaishou's business trajectory.

According to Intelligence Emergence, the Keling team did not crack a key text-to-video technology until early 2024. In March 2024, Gai Kun saw a demo of “Keling” for the first time.

The company's expectations for AI commercialization were originally not high. Intelligence Emergence learned that in the Q4 2024 OKRs of Wang Jianwei (Thomas), head of Kuaishou's commercialization business, “AI commercialization” was not an Objective (O) but merely a Key Result (KR) under “Growth”.

Kuaishou has not had a new story to tell for a long time. Although it also grew up on short video, Kuaishou has a far less extensive business map than ByteDance, which has kept expanding its territory. As of 2023, Kuaishou's revenue still centered on online marketing, live-streaming, and e-commerce on its short-video platform.

However, the emergence of “Keling” has shown Kuaishou a new growth curve beyond short video.

Kuaishou's financial report shows that cumulative revenue from Keling AI exceeded 100 million yuan between the opening of its API services in September 2024 and February 2025. On the user side, Gai Kun revealed at this press conference that “Keling” now has 22.23 million users, its monthly active users have grown 25-fold, and its global enterprise and developer customers have exceeded 150,000.

“Keling” not only sustains itself but also drives Kuaishou's other businesses. An employee of Kuaishou's commercial marketing service platform “Magnetic Engine” once told Intelligence Emergence that “Keling” has brought clear growth to Kuaishou's advertising business:

“Large customers spend more than 100,000 yuan a month on advertising, and their ad materials can be generated by AI. A video editor can produce at most 10 ad materials a day, whereas Keling can generate thousands. Using algorithms, we can distribute thousands of ad materials to users' recommendation feeds within a day.”

With the release of “Keling 2.0”, Keling continues to carry “the hope of the whole village” for Kuaishou.

Unlike Kuaishou, which broke through with a surprise attack at a single point, ByteDance carries the heavier burden of being the star pupil of the video generation track.

Over the past two years, ByteDance's heavy investment in AI, in talent, computing power, and money, has been obvious to all. Yet it faces DeepSeek in text models and Keling in video models, and is locked in close competition with MiniMax's Conch AI in voice models. ByteDance seems to have a hand in everything but a firm grip on nothing.

After painful reflection, ByteDance launched an internal reform at the beginning of spring 2025. In March 2025, Wu Yonghui, the new head of the AI department “Seed” and a former Google Fellow, said at the department's all-staff meeting that the organizational culture should be further strengthened to create an open, inclusive, and confident research atmosphere, and that technological openness should be increased.

The release of Seaweed - 7B is a footnote to ByteDance's AI reform.

Following Doubao's first public release of a text-to-image technical report in March, Seaweed has become the first video model for which ByteDance has publicly released a technical report. Notably, the research team, including Jiang Lu, Feng Jiashi, Yang Zhenheng, and Yang Jianchao, whom ByteDance had long kept under wraps, also appeared publicly for the first time as co-authors of the report.

After regrouping, the competition between the two sides has just begun.
