Breaking from the mainstream Transformer path, "YuanShi Intelligence (RWKV)" raises an angel round worth tens of millions of RMB | 36Kr Exclusive

36Kr has learned that YuanShi Intelligence (RWKV), a company focused on innovation in large model architecture, completed an angel round worth tens of millions of RMB in December. The investor is Tianji Capital. After this round, the company's valuation doubled compared with the previous seed round. The funds will mainly be used for team expansion, iteration of the new architecture, and product commercialization.

More than two years have passed since OpenAI released ChatGPT in November 2022 and set off a global wave of generative AI. The Transformer architecture and the Scaling Law behind ChatGPT have been the main technical threads of this revolution.

In short, large language models (LLMs) show emergent intelligence because their parameter counts have grown from hundreds of millions to hundreds of billions and even trillions. Once a model of that scale has learned from enough data, intelligence emerges.

However, large models also have their own "Achilles' heel": hallucination and accuracy problems are almost impossible to solve completely. In 2024, which has just ended, as the iteration of large models slowed, both academia and industry entered a major debate over the Transformer architecture and the Scaling Law (the observation that model performance improves as computing power and data scale increase, yielding more intelligence).

YuanShi Intelligence (RWKV) was founded precisely to explore a new path that can surpass the Transformer architecture. "We are not only a large model company, but also a 'black technology' company with the ability to continuously achieve innovation in the underlying architecture of AI models," said Luo Xuan, co-founder of YuanShi Intelligence.

Peng Bo, the founder of RWKV, graduated from the Department of Physics at the University of Hong Kong and previously worked as a quantitative trading expert. Since 2020, Peng Bo has independently developed RWKV, an innovative architecture and open-source project. At the end of 2022, RWKV released its first model. By June 2023, the commercial company was officially established; the team has grown from the initial three people to nearly 20.

Unlike the Transformer architecture, which relies on huge amounts of computing power and data, RWKV has chosen a technical route that emphasizes efficiency and flexibility.

"Simply put, with the current mainstream Transformer architecture, for every token the model outputs in a conversation, it has to 'read' the entire preceding text from the beginning, and it must keep a record of the state of every token in that text (that is, the KV Cache)," said Luo Xuan, co-founder of YuanShi Intelligence. This means the Transformer is not an efficient architecture for processing information and requires a great deal of computing power.

RWKV's biggest technical breakthrough is that the model does not need to keep a record of the state of every token. In other words, it does not need to "read the entire text from the beginning and then give a reply" at each step of a conversation, which greatly reduces the amount of computation. This is equivalent to combining the efficient parallel training of the Transformer with the efficient inference of the RNN.
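The contrast between a growing KV Cache and a fixed-size recurrent state can be illustrated with a toy sketch. This is not RWKV's actual formulation: the function names, the constant `decay`, the 4-dimensional vectors, and the outer-product update are all assumptions chosen only to show how memory scales with the number of tokens.

```python
import math


def attention_decode_step(cache, query, key, value):
    """One softmax-attention step: append (key, value), then read the whole cache."""
    cache.append((key, value))
    scores = [sum(q * k for q, k in zip(query, past_key)) for past_key, _ in cache]
    peak = max(scores)  # subtract the max for numerical stability
    weights = [math.exp(s - peak) for s in scores]
    total = sum(weights)
    return [
        sum(w * past_value[d] for w, (_, past_value) in zip(weights, cache)) / total
        for d in range(len(value))
    ]


def recurrent_decode_step(state, query, key, value, decay=0.9):
    """One linear-recurrence step: fold (key, value) into a fixed-size state, then read it.

    The constant decay is a hypothetical stand-in, not RWKV's update rule.
    """
    for i, k in enumerate(key):
        for j, v in enumerate(value):
            state[i][j] = decay * state[i][j] + k * v
    return [sum(query[i] * state[i][j] for i in range(len(query))) for j in range(len(value))]


def stored_floats_after(num_tokens, dim=4):
    """Count the floats each approach holds after decoding num_tokens tokens."""
    cache = []
    state = [[0.0] * dim for _ in range(dim)]
    for t in range(num_tokens):
        vec = [math.sin(t + d) for d in range(dim)]  # arbitrary toy token vector
        attention_decode_step(cache, vec, vec, vec)
        recurrent_decode_step(state, vec, vec, vec)
    cache_floats = sum(len(k) + len(v) for k, v in cache)
    state_floats = sum(len(row) for row in state)
    return cache_floats, state_floats
```

With these toy settings, `stored_floats_after(10)` returns `(80, 16)` and `stored_floats_after(1000)` returns `(8000, 16)`: the cache grows linearly with the text, while the recurrent state stays the same size.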

The RNN (Recurrent Neural Network) is not a new technology. Although its inference is more efficient than the Transformer's, before RWKV it was generally believed that RNNs were less capable than Transformers. RWKV has shown that an improved RNN not only remains more efficient than the Transformer but also has strong language modeling ability.

The price of higher efficiency, however, is that an RNN with a fixed-size state cannot compress an arbitrarily long preceding text into that state. That is, RWKV gradually forgets "details that the model automatically judges as forgettable" (details that the model automatically judges as important are retained persistently). This is equivalent to answering questions after reading the preceding text once, without going back to re-read it.
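A rough way to picture this selective forgetting is a per-step decay factor applied to whatever is stored in the state. The sketch below is only an illustration under that assumption: the decay values 0.9 and 0.999, the 50-step horizon, and the helper `retained_weight` are hypothetical and are not taken from RWKV.

```python
def retained_weight(per_step_decays):
    """Fraction of a token's original contribution left after the given decay steps."""
    weight = 1.0
    for decay in per_step_decays:
        weight *= decay
    return weight


steps = 50
# A detail the model lets fade: a strong decay is applied at every step.
forgettable = retained_weight([0.9] * steps)
# A detail the model keeps: the decay stays close to 1 at every step.
important = retained_weight([0.999] * steps)
```

After 50 steps the "forgettable" contribution has shrunk to well under 1% of its original weight, while the "important" one still retains more than 90%.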

Peng Bo believes this is not a defect of the RWKV architecture. The human brain does not have perfect memory either, yet humans can achieve effectively perfect recall through a small amount of repetition and external memory. RWKV can use RL (Reinforcement Learning) to decide automatically when to re-read the preceding text, which is much more efficient than the Transformer's approach of "being forced to remember everything".

At the same time, these characteristics also help RWKV in certain applications. In creative scenarios such as writing and music generation, the model's output tends to be more original and to have less of an "AI flavor".

"In creative fields such as music generation, the architecture of RWKV is closer to the memory deduction mechanism of the human brain. It is not a simple retrieval of past information, but a 'deduction' through continuous update and reorganization to generate new content," Luo Xuan explained.

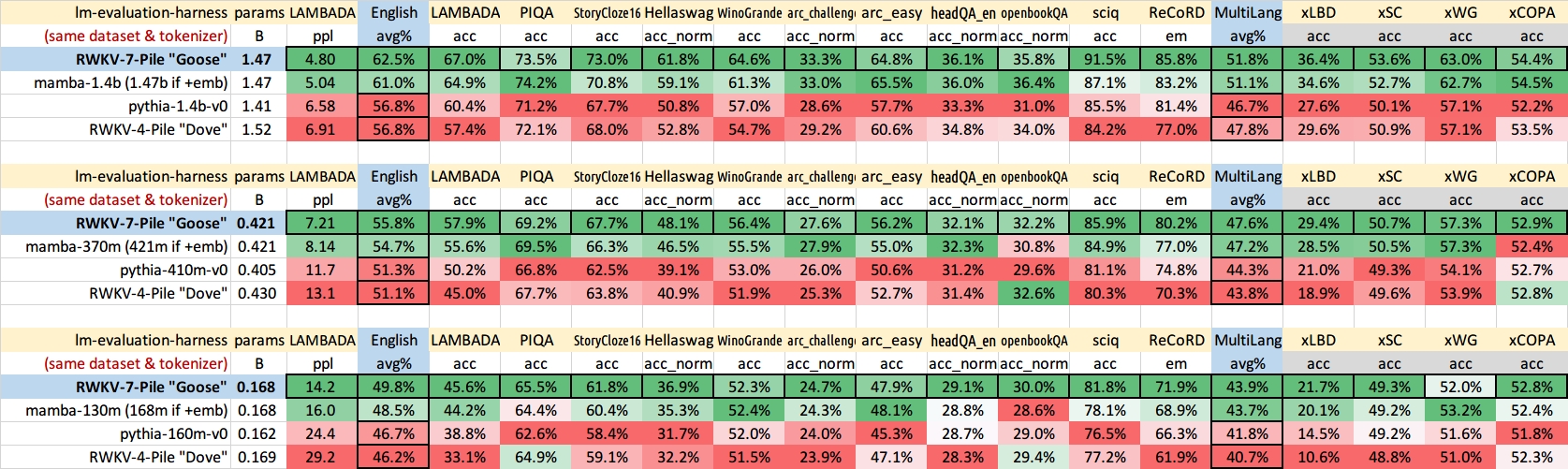

Currently, RWKV has trained models ranging from 0.1B to 14B parameters, and the overseas community has released a 32B preview model. Over the past two years, RWKV has also achieved important technical breakthroughs: the architecture has iterated from RWKV-4 to RWKV-7.

The newly released RWKV-7 model can outperform the Transformer architecture across the board at the same parameter scale. The advantage shows up on several dimensions. For example, in terms of learning efficiency, RWKV-7 improves accuracy faster than a fully optimized Transformer. With the same parameter count and training data, RWKV-7 also performs better on core benchmarks such as English and multilingual tests.

Source: RWKV

RWKV-7's memory is also significantly stronger than that of earlier RWKV versions. For example, a 0.1B-parameter RWKV-7 model trained with a 4k context window can solve a 16k needle-in-a-haystack task without additional tuning.

"The RNN-like architecture adopted by RWKV is closer to the operation mode of the human brain and the universe. Through an efficient information compression mechanism, the model can achieve continuous learning and evolution under limited resources," Luo Xuan said.

Continuous learning is also an important technical breakthrough of the RWKV-7 version. Compared to the "training - inference separation" mechanism adopted by the mainstream models, RWKV enables the model to "learn while inferring" and better learn the patterns in the previous text.

RWKV's efficient inference mechanism is well suited to small models and edge-side scenarios. Although large models perform strongly, they still face many constraints at the computing level: whether on a mobile phone or a computer, if the hardware lacks a sufficiently powerful computing unit, the model cannot run locally and has to rely on cloud computing, which degrades the user experience.

Currently, YuanShi Intelligence's business is divided into two major parts. The first is the open-source model, which will remain fully open-source and free. On GitHub, RWKV's core open-source project, RWKV-LM, has received more than 12,900 stars, and a developer ecosystem has gradually taken shape. Many universities and companies, including Tencent, Alibaba, Zhejiang University, and Southern University of Science and Technology, have used RWKV. The second is the commercial entity. In 2024, RWKV made many attempts on the product side, covering both To B and To C.

On the software side, RWKV has launched an AI music generation application for the consumer market. In the To B field, YuanShi Intelligence has chosen two major areas, embodied intelligence and new energy, in which it licenses its models to enterprises. Customers signed so far include State Grid, Youlu Robot, and other enterprises.

Looking ahead, YuanShi Intelligence plans to launch RWKV-7 models with 70B or more parameters, along with an on-device deployment solution, in 2025, and to explore larger-scale models by combining a new inference framework with new chips. Luo Xuan said that, with the current shift in Scaling Laws, the breakout period for new architectures is expected to arrive in the first half of 2025, and YuanShi Intelligence will also accelerate commercialization.
