OpenAI releases the o1 model: major errors down 34%, speed up 50%, and unlimited use for Pro subscribers at $200 a month | Frontline

Written by | Tian Zhe

Edited by | Su Jianxun

At 2 a.m. on December 6, OpenAI held the first live stream in its series of 12 consecutive working days of releases. In this live stream, OpenAI launched the o1 model and a new subscription service, ChatGPT Pro. Starting today, the o1 model replaces o1-preview, and both ChatGPT Plus and Pro subscribers can use it.

In September this year, OpenAI first unveiled the o1 large model, which can answer complex questions in fields such as science, coding, and mathematics. At that time, however, only two versions, o1-preview and o1-mini, were released, and the full capabilities of o1 were not yet available. The o1 model launched in this live stream improves on intelligence, multimodal input, and thinking speed.

Sam Altman, co-founder and CEO of OpenAI, said that compared with o1-preview, the o1 model makes major errors approximately 34% less often and thinks approximately 50% faster.

He said that what sets o1 apart is that it thinks before answering, which lets it give more detailed and more accurate answers than other models.

The intelligence of o1 shows in its higher accuracy on complex problems such as mathematics. According to the reported figures, o1 reached accuracy rates of 78.3% on the AIME 2024 mathematics competition and 75.6% on doctoral-level science questions, which are 28.3 and 1.5 percentage points higher, respectively, than those of o1-preview.
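The arithmetic behind these figures can be sketched as follows. This is a minimal illustration that assumes the reported gains are percentage-point differences; the o1-preview baselines are derived by subtraction and are not stated in the article, and the variable names are illustrative.

```python
# Reported o1 accuracy (%) and reported gain over o1-preview (percentage points).
o1_accuracy = {"AIME 2024": 78.3, "PhD-level science": 75.6}
gain_points = {"AIME 2024": 28.3, "PhD-level science": 1.5}

# Assumption: the gains are absolute differences, so baseline = o1 - gain.
implied_preview = {
    name: round(o1_accuracy[name] - gain_points[name], 1) for name in o1_accuracy
}

# The same gain expressed relative to the implied baseline.
relative_gain = {
    name: round(100 * gain_points[name] / implied_preview[name], 1)
    for name in o1_accuracy
}

for name in o1_accuracy:
    print(
        f"{name}: o1-preview ~{implied_preview[name]}%, o1 {o1_accuracy[name]}%, "
        f"+{gain_points[name]} points ({relative_gain[name]}% relative)"
    )
```

Read this way, the mathematics gain is large in both absolute and relative terms, while the science gain is small on either measure.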

Response speed has also improved. In offline testing, o1 responded about 60% faster on average than o1-preview. During the live stream, OpenAI employees asked both o1 and o1-preview to list the Roman emperors of the 2nd century AD and briefly describe their lives. o1 took only about 14 seconds to answer, while o1-preview took 33 seconds.
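For a sense of scale, the two timings from the demo can be turned into a time reduction and a speed-up factor. This is only a sketch based on that single question; it is not how OpenAI computed its average figure, and the variable names are illustrative.

```python
# Response times observed in the live demo, in seconds.
o1_seconds = 14
preview_seconds = 33

# Share of the waiting time saved, relative to o1-preview.
time_saved_percent = round(100 * (preview_seconds - o1_seconds) / preview_seconds, 1)

# How many times faster o1 finished the same question.
speedup_factor = round(preview_seconds / o1_seconds, 2)

print(f"Time saved: {time_saved_percent}%")
print(f"Speed-up: {speedup_factor}x")
```

On this one question, o1 needed a little under 58% less time than o1-preview, which is in the same range as the reported average.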

OpenAI also noted that the earlier model took a long time to respond to every question, whether simple or complex, and said it has fixed this problem. Now o1 answers simple questions quickly and takes longer to think only when a question is complex.

In addition, o1 now accepts multimodal input: it can take in image and text content together and reason over both.

OpenAI showed a sheet of A4 paper with a hand-drawn sketch that included the sun, a cooling system, and various values, then photographed it and uploaded the photo to o1. Without any prompting, o1 generated the questions the user might want to ask and answered them automatically. In less than 10 seconds, o1 not only understood what the sketch was asking but also identified the parameters that had not been provided and reasoned its way to the correct answer.

To serve users who need the model without call limits, OpenAI has launched a new subscription plan, ChatGPT Pro. For $200 per month, subscribers get unlimited use of o1 Pro, a stronger version of the o1 model.

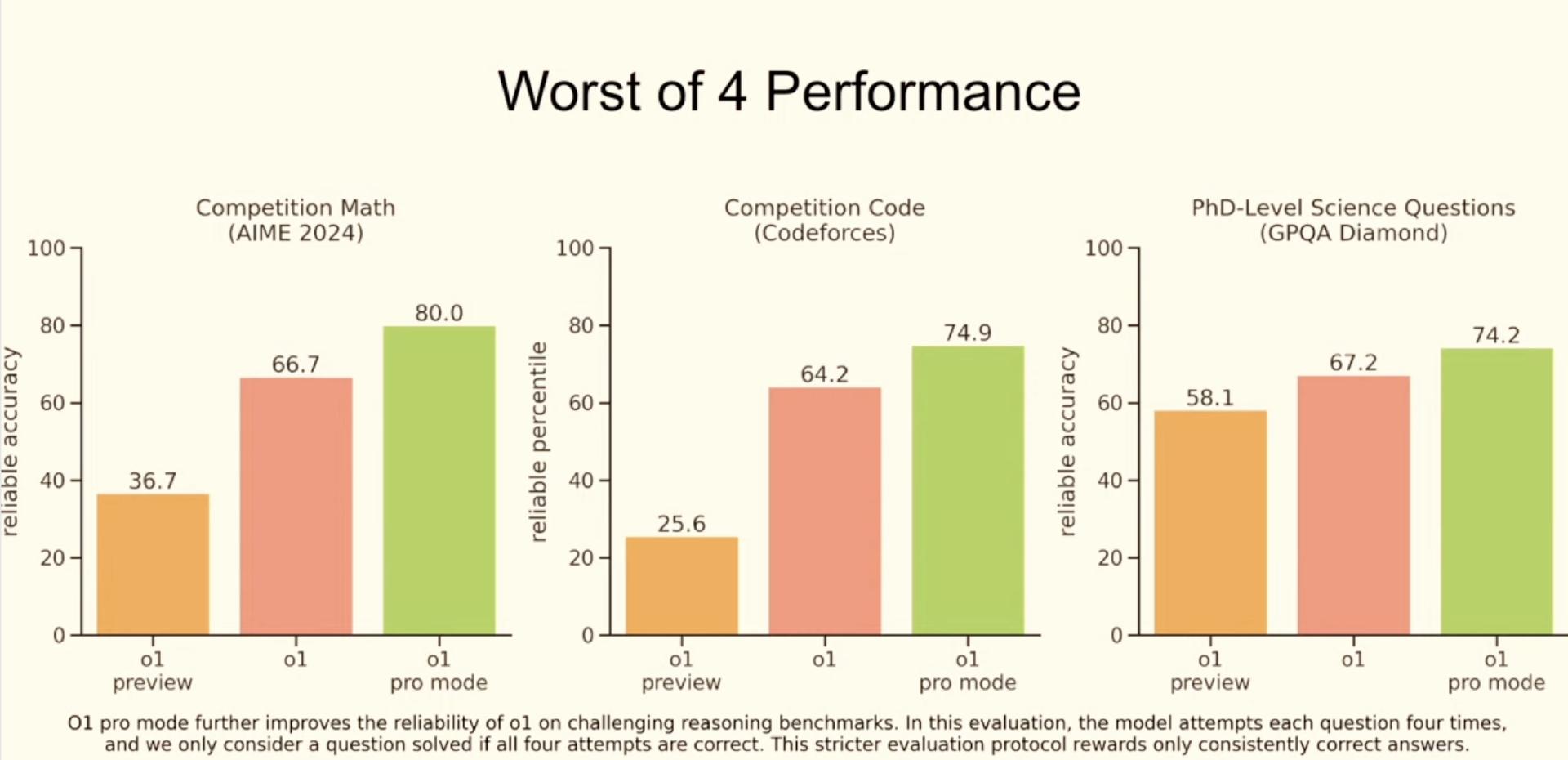

OpenAI compared the answers of o1-preview, o1, and o1 Pro on mathematics competition problems, coding competition problems, and doctoral-level science questions. With each question asked four times, o1 Pro had the highest reliable accuracy in all three categories, at 80.0%, 74.9%, and 74.2% respectively.

Source: OpenAI
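The reliability measure above can be sketched in code. This minimal example assumes a question counts as solved only when all four attempts are correct, which is how OpenAI's chart appears to define it; the function names and the sample results are hypothetical and are not OpenAI's data.

```python
from typing import List


def strict_reliability(attempts: List[List[bool]], k: int = 4) -> float:
    """Fraction of questions answered correctly in all of the first k attempts."""
    solved = sum(1 for tries in attempts if len(tries) >= k and all(tries[:k]))
    return solved / len(attempts)


def average_accuracy(attempts: List[List[bool]]) -> float:
    """Fraction of individual attempts that were correct."""
    total = sum(len(tries) for tries in attempts)
    correct = sum(sum(tries) for tries in attempts)
    return correct / total


# Hypothetical results: five questions, four attempts each.
sample = [
    [True, True, True, True],
    [True, True, False, True],
    [False, False, False, False],
    [True, True, True, True],
    [True, False, True, True],
]

print(f"Average accuracy: {average_accuracy(sample):.0%}")
print(f"4/4 reliability: {strict_reliability(sample):.0%}")
```

In the sample, 70% of individual attempts are correct but only 40% of questions are solved every time, which shows why a reliability score is stricter than a single-attempt accuracy rate.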

In the live stream, OpenAI posed a chemistry question that o1-preview had answered incorrectly, asking o1 Pro to find proteins that meet specific criteria. o1 Pro completed its answer in only 53 seconds and let users view its thinking process.

OpenAI said it plans to have o1 Pro support more computationally intensive tasks, so that it can handle longer and more complex work. In addition, o1 Pro will gain web browsing, file upload, and enhanced API support (such as structured outputs, function calling, and image understanding).