Ni Xiaolin from "Houmo Intelligence": The next decade belongs to the era of large models, and NPU will reshape all edge scenarios | WISE2024 King of Business Conference

From November 28 to 29, the two-day 36Kr WISE2024 Business King Conference was grandly held in Beijing. As an all-star event in the Chinese business field, the WISE Conference is already in its twelfth session this year, witnessing the resilience and potential of Chinese business in an ever-changing era.

2024 is a year that is somewhat ambiguous and has more changes than stability. Compared to the past decade, everyone's pace is slowing down, and development is more rational. 2024 is also a year to seek new economic momentum, and new industrial changes have put forward higher requirements for the adaptability of each subject. This year's WISE Conference takes "Hard But Right Thing" as the theme. In 2024, what is the right thing has become a topic we want to discuss more.

On that day, Ni Xiaolin, Vice President of "Houmo Intelligence", delivered a keynote speech, sharing the unlimited possibilities and far-reaching impacts behind AI and NPU.

The following is the speech content (edited and compiled by 36Kr)

Ni Xiaolin: Dear guests, hello!

I am Ni Xiaolin from "Houmo Intelligence", and "Houmo Intelligence" is an AI chip company based on the integration of storage and computing. Today, every session is talking about AI. Various AI large models, AI devices, and AI application scenarios are developing rapidly. As a participant and witness of the AI era, we feel extremely honored. Next, I will share with you some views of Houmo Intelligence on the changes in the demand for AI computing power on the edge side.

Houmo Intelligence

In November 2022, OpenAI released ChatGPT3.5, marking the arrival of the AI 2.0 era; in 2023, a large number of local large models were released. AI began to develop rapidly along two paths simultaneously. Cloud models continue to evolve forward along the scaling law, with the model scale and parameters continuing to increase, constantly exploring the boundaries of general intelligence. For example, the parameters of GPT4 released last year are as high as 1500B, that is, 1.5 trillion parameters. However, while pursuing high generality and high intelligence, it also brings extremely high investment and high operating costs. At present, a large number of players have begun to withdraw from this escalating track.

At the same time, on the edge side, more models suitable for local deployment, such as 7B, 13B, and 30B, have emerged. Compared with large-scale general intelligence, these models are more suitable for entering thousands of industries to solve various practical problems. Compared with the "thousands of models competing and hundreds of models contending" in the cloud, the edge side undoubtedly has a larger application scale. Every year, the number of new intelligent devices globally reaches billions, and the scale and prospects of the global edge side are even greater.

In addition, AI on the edge side has the advantages of being more personalized, having low latency and real-time performance, and data privacy. For example, AI PC has an exclusive Agent that knows your personal information and a local database established based on your historical documents.

Of course, at present, we see more discussions about cloud models, and edge side models have not yet been popularized. I think in addition to these software factors, another very important influence is hardware. The hardware requirements on the edge side are different from those on the cloud, and can be summarized as "three highs and three lows": high computing power, high bandwidth, high precision, low power consumption, low latency, and low cost.

Obviously, CPU cannot meet all the requirements. Although GPU can meet the three highs, its high cost and high power consumption greatly limit the popular use of edge side devices. For example, if we run a 30B model locally, we need a 4090 graphics card, but the cost of nearly 20,000 yuan and the power consumption of nearly 500W make it unbearable for the vast majority of devices.

Unlike the cloud, where the "training" scenario is the main one, large models on the edge side are basically based on the "inference" scenario. The NPU specially designed for local large models is believed to be more suitable for use on the edge side. For example, we can achieve the same computing power as GPU with one-tenth of the power consumption. At the same time, NPU comes with a large memory and can run smoothly and independently without occupying system memory through the system bus. The cost of NPU is also much better than that of GPU, making it possible to add AI to existing devices.

Facing the demands of high bandwidth and low power consumption of AI on edge devices, the traditional von Neumann architecture has faced huge challenges, especially the storage wall and power consumption wall, which many enterprises have been struggling with.

The storage wall refers to the fact that the limited bus bandwidth severely limits the speed of data transmission.

The power consumption wall refers to the fact that more than 90% of the power consumption is consumed in the transportation of data, rather than the truly required calculation and processing.

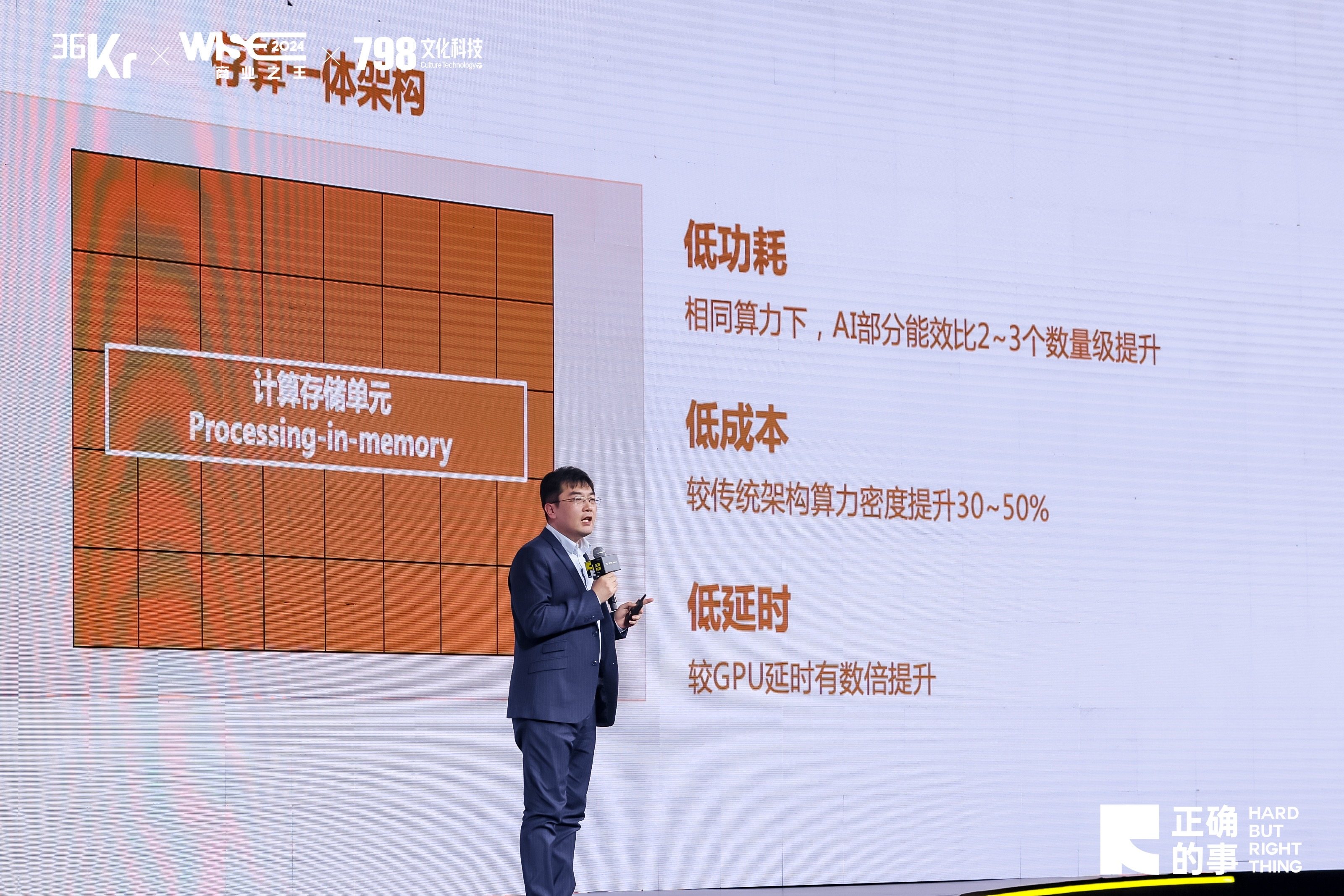

Facing these two high walls, Houmo Intelligence adopts a new architecture of the integration of storage and computing, achieving a high degree of integration of storage units and computing units. Computing is directly completed in the storage units, resulting in a significant reduction in power consumption and a significant increase in bandwidth.

The AI chip with the integration of storage and computing has achieved a 2-3 orders of magnitude improvement in AI energy efficiency ratio. The computing power density has increased by up to 50%, and the latency is several times higher than that of traditional architecture chips. These characteristics are very suitable for the needs of large AI models on the edge side.

Houmo Intelligence

Currently, Houmo Intelligence has independently developed two generations of the integration of storage and computing chip architecture, and has carried out special designs for LLM-type large models.

It is mainly reflected in:

1. Based on the self-developed IPU architecture of the integration of storage and computing, it provides highly parallel floating-point and integer computing power. The self-developed SFU supports a variety of nonlinear operators; the self-developed RVV multi-core provides a super-large general computing power, which can flexibly support various LLM/CV algorithms; the self-developed C2C interface has the characteristics of multi-chip cascade expansion to achieve the deployment of larger models.

2. The supporting Houmo Avenue software toolchain is simple and easy to use and is compatible with general programming languages. The operator library adapted to the storage and computing architecture can efficiently utilize the performance/power consumption advantages brought by the storage and computing IP to improve the deployment and launch time.

In 2023 and 2024, we have respectively launched two NPU chips, Houmo Hongtu®️ H30 and Houmo Manjie®️ M30, fully demonstrating the huge advantages of the integration of storage and computing architecture in computing power and power consumption. Taking M30 as an example, it has a powerful computing power of 100Tops and only requires a power consumption of 12W.

Here is an advance notice. In 2025, Houmo will launch the latest chip based on the new-generation "Tianxuan" architecture, and its performance will be significantly improved once again. We believe that this chip can accelerate the process of deploying large models on edge devices.

Houmo Intelligence

In order to facilitate the rapid deployment of AI device solution providers and manufacturers, we not only provide chips but also provide a variety of standardized product forms, including Limou®️ LM30 intelligent accelerator card (PCIe), Limou®️ SM30 computing module (SoM), etc. For various existing terminal devices, by adding an NPU through a standard interface, the smooth operation of the local AI large model can be realized.

The rise of the mobile Internet in 2009 turned our mobile phones from feature phones into smart phones. The outbreak of the Internet of Things in 2016 made more and more devices around us become smart devices. These smart devices, on the existing solutions, will evolve again through the +AI + NPU method and become AI large model-enabled devices.

For example, for PCs, we have seen that Lenovo has been promoting AI PCs on a large scale. Automotive AI cockpits, AI TVs, AI conference large screens, AI embodied intelligent robots, etc., they will become assistants, secretaries, drivers, copywriters, graphic designers, programmers, tutors, etc. that understand you better, are smarter, and more efficient, providing us with various services. We hope that through the AI chips of Houmo Intelligence, we can help everyone achieve a rapid upgrade.

From 1999 to 2008, the Internet made almost all industries in China redo themselves; from 2009 to 2018, the mobile Internet made almost all industries in China redo themselves again. We believe that in the AI large model era in the next 10 years, NPU will reshape all edge scenarios, and all edge devices will be redone again!

We hope to communicate more with all AI enterprises and AI ecosystem partners here. Let's work together and cooperate to enable existing devices and various new devices that will be born in the future to run AI large models smoothly. We look forward to working together to create a new era of AI. Thank you all!