After Going Viral by Challenging Sora, How Will Luma AI Handle the Flood of Traffic? | 36Kr Exclusive Interview

Written by Zhou Xinyu

Edited by Su Jianxun

The story of Luma AI taking on OpenAI in video is a bit like Kevin Durant suddenly switching to tennis and beating Nadal, a men's singles Grand Slam champion.

Recently, in a conversation with "Intelligent Emergence", this Silicon Valley AI company, founded in 2021, looked back on how its video generation model Dream Machine AI took off.

It is no small feat for a startup to be the first in Silicon Valley to release a video model comparable to OpenAI's Sora, especially since Luma AI is something of a latecomer to video generation:

Before 2024, it was a small company of about 10 people focused mainly on 3D generation. Barkley Dai, head of data products at Luma AI, told "Intelligent Emergence" that only after deciding to pivot to video generation in December 2023 did the team begin recruiting video specialists, growing to about 50 people.

He said that Luma AI's emergence as a dark horse in video generation depended on three things: technical strength, release timing, and operational strategy.

The company considers talent its most important asset in building a video model. After deciding in December 2023 to pivot from 3D to video generation, Luma AI brought in 40 AI specialists.

To compete with OpenAI and Google, Luma AI also optimized the model's algorithms and infrastructure extensively. Barkley told "Intelligent Emergence" that the team started from the same DiT (Diffusion Transformer) architecture as Sora and built its own modified version of it, preserving generation quality while cutting training and inference costs.

Dream Machine AI was released on June 13, 2024, filling a gap in the video model market and making it a rare commodity: apart from Kuaishou's "Keling", it was the only video model truly open to the public.

Its "limited free" strategy also drew a flood of trial users immediately: within four days of launch, Dream Machine AI passed one million users. Barkley disclosed to "Intelligent Emergence" that Luma AI spent nothing on paid promotion for Dream Machine AI; its growth relied entirely on organic promotion by KOLs and word of mouth among users.

Now that the initial hype has peaked, Luma AI's challenge is to improve user retention and keep its success from being a flash in the pan.

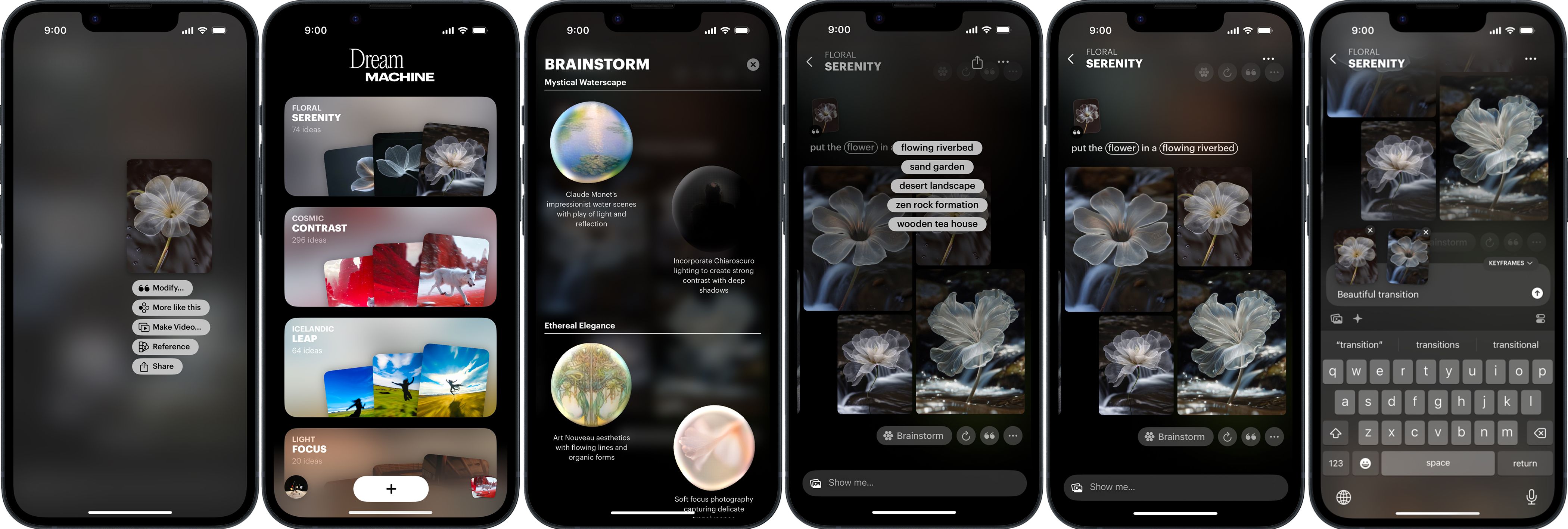

On November 26, 2024, nearly six months after the video model's release, Luma AI launched the Dream Machine AI creative platform on iOS and the web. Alongside it, the company released its first in-house image generation model, Luma Photon.

△The iOS interface of Dream Machine.

Luma AI product designer Jiacheng Yang told "Intelligent Emergence" that, unlike professional tools such as Midjourney and Adobe's products, Dream Machine does not require users to learn prompt writing or to know anything about design. "Our goal is to create an AI visual tool that both AI novices and design novices can easily handle."

According to him, Dream Machine has five core features:

(1) Creating and editing images through natural-language conversation;

(2) AI-suggested creative ideas: based on the user's prompt, it automatically offers creative and style options;

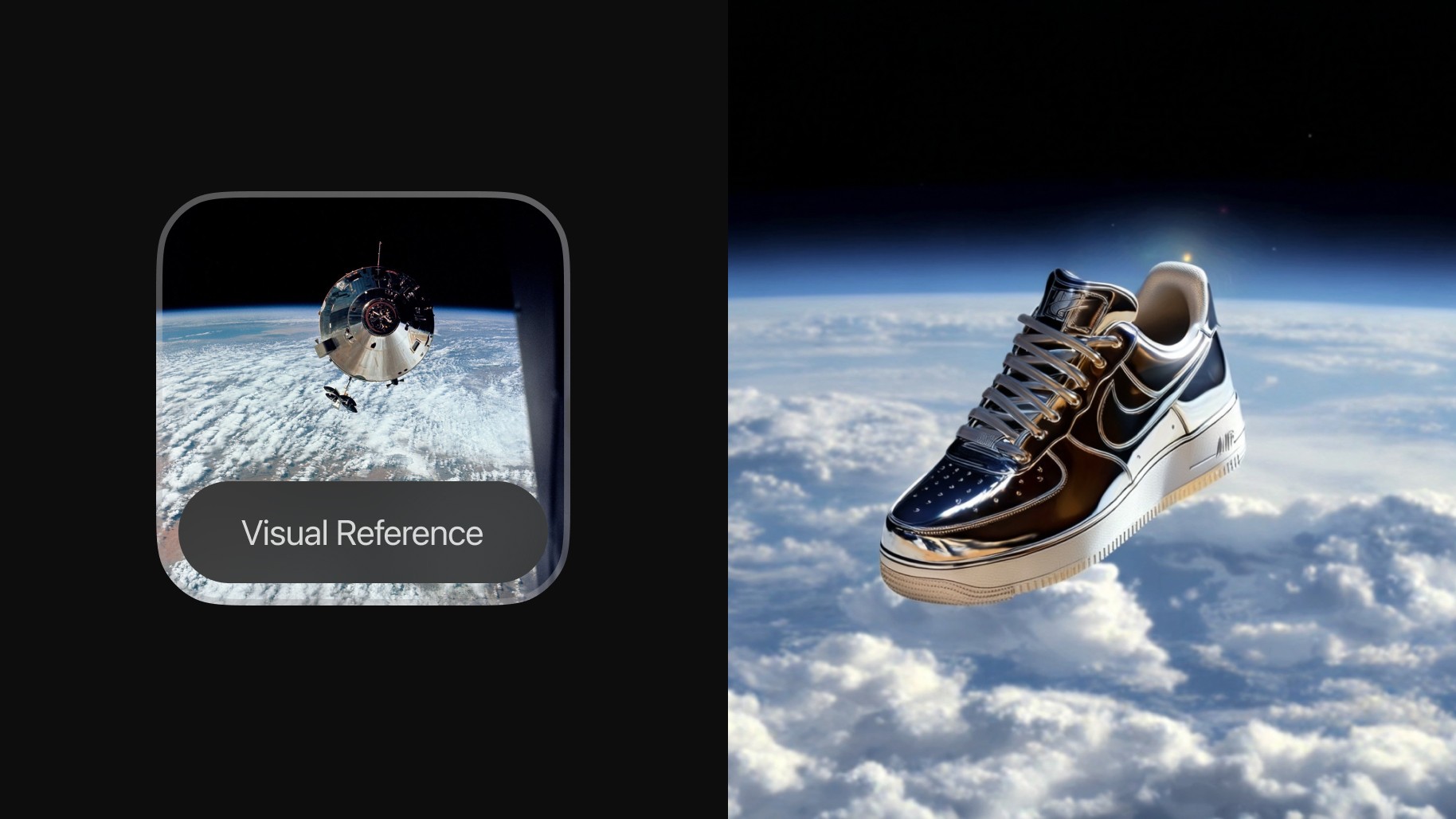

(3) Visual reference: generating images with the same subject or style as a photo the user provides;

(4) Converting AI-designed images into videos so users can view the subject from different angles;

(5) Publishing all AI-generated assets to a shared board and generating a shareable link, to make team brainstorming easier.

△The visual reference function of Dream Machine.

Why use an image design platform to serve the video model's users? "Video generation alone is not enough to expand the user base in AI visuals. Image generation has a wider range of use cases, so we wanted to build an easy-to-use design platform, one that users can pick up easily and that also showcases what our models can do," Barkley said.

As a startup, Luma AI has to contend with industry competition. The team believes that differentiation is the key to making its models and products recognizable and winning customers.

For example, facing competition from image products such as Midjourney, Dream Machine has pushed its language understanding to what the team calls the "ceiling". The team also describes it as the model best at rendering typography: compared with images containing text generated by Midjourney and GPT, the text in Dream Machine images shows the best design sense and clarity.

△Text rendered in images generated by Dream Machine.

As with the video model, Luma AI spent nothing on paid promotion for the Dream Machine platform. In Barkley's view, whether burning money on marketing is worthwhile depends on the return, which ultimately means the product has to speak for itself. Moreover, "The AI market is still small. I think it is too early for AI companies to burn money on marketing. It is better to put that money into product research and development."

The following is the conversation between "Intelligent Emergence" and Barkley Dai, head of growth at Luma AI, and Jiacheng Yang, a product designer at Luma AI. It has been lightly edited by "Intelligent Emergence":

Burning Money for Marketing is Still Premature for AI Companies

"Intelligent Emergence": When the video model Dream Machine was released in June 2024, did the team expect it to become so popular?

Barkley: Actually, it exceeded our expectations. At one point our servers and GPU resources couldn't keep up.

"Intelligent Emergence": If you had to sum up why it took off, what would you say?

Barkley: Actually, the first version we released wasn't our best-performing one. But we decided to make it completely free for everyone.

At the time, no other video model offered that, so it drew a lot of attention very quickly.

"Intelligent Emergence": Isn't going free a tough decision for a startup?

Barkley: Actually, we did set a free quota at the time, which I think is standard practice in the industry.

It's just that the traffic peak was more than we could handle: huge numbers of users poured in within a short time, and our backend was flooded with requests.

"Intelligent Emergence": Can the company afford the inference costs that come with this traffic?

Barkley: Actually, we've done a lot of technical work to cut costs, such as steadily speeding up video generation. At first, our model needed 120 seconds to generate a 5-second video; now it takes only 20 seconds.

There is still a lot of room to optimize video model inference without sacrificing generation quality, so the cost of running the video model has kept falling over the past six months.

So I think the inference cost is not a particularly big burden for us. Of course, it is an expense, but it will be lower in the future.

"Intelligent Emergence": You mentioned that Dream Machine has a free quota. What is the paid conversion rate once users use it up?

Barkley: To be honest, we had no expectations for the conversion rate at all. At the time, we positioned Dream Machine as a product to educate users and show them the potential of Luma AI's video generation. No released video model on the market was on par with Sora then, so we had no benchmark for conversion.

But the AI design platform we've just launched is positioned as a product for acquiring paying customers, so we have higher expectations for its revenue and conversion rate.

"Intelligent Emergence": How much have you spent on marketing Dream Machine?

Barkley: Zero. We didn't spend anything on marketing at launch.

Of course, we reached out to many creators in advance, and they were very excited after trying it. Most of them had used Runway before, and some had used Keling, but after using our product they all felt "This is the next big thing" and promoted us on Twitter of their own accord.

But we didn't pay for any placements, because we firmly believe that success ultimately comes down to the product itself.

"Intelligent Emergence": Is burning money on marketing common among Silicon Valley AI companies?

Barkley: My sense is that Silicon Valley is mostly product-driven, while operations-heavy growth is more of an approach taken by Chinese companies.

The market for AI visuals is still small. I think it's too early for AI companies to burn money on marketing. ChatGPT may have a huge user base, but many visual models still have very few users.

If you pour money into paid acquisition to grab market share at this stage, retention will certainly be low. It's better to put that money into model and product R&D and grow users with better models and products.

"Intelligent Emergence": Before the release of the video model, Luma AI's technology and products were still focused on 3D generation. When did the team decide to make a video generation model?

Barkley: Around December 2023.

"Intelligent Emergence": Why did you shift from 3D to making video and image models?

Barkley: Actually, we wouldn't call ourselves a 3D company. We've always positioned ourselves as an AI company focused on vision. We want to understand how the visual structure of the world can help AI understand the world.

Given the founding team's research background, 3D was an area where Luma AI was stronger than the vast majority of companies and teams at the start, and we did go on to make many technical breakthroughs in 3D generation.

However, far less 3D data is available for training than image and video data. And in terms of use cases, phones and computers are still the main product platforms today, and on those devices 3D is more limited than video.

So once we had more compute, more talent, and more capability to pursue our vision of better understanding the world, moving from 3D to video was a natural step.

"Intelligent Emergence": Wouldn't this make the company seem strategically unstable?

Barkley: From my perspective as someone inside the company, both 3D and video generation have always made sense.

3D, video, and images are each just a modality. If our ultimate goal is to understand the world, then whatever the modality, generation method, or creative application, as long as the goal stays the same, these media are just means of getting there.

"Intelligent Emergence": Did you run into any difficulties in the transition from 3D to video generation?

Barkley: I think the whole process went fairly smoothly. When we were doing 3D generation, the team had only about a dozen people. Once we started on video generation, we brought in many people with video expertise, and the team now has more than 50 people.

The process was really about bringing in new members to advance our goal, not about everyone constantly switching directions. The people who used to work on 3D have simply been moving gradually into video-related work, such as data.

"Intelligent Emergence": Has your 3D experience helped with video generation? Many users say Dream Machine handles motion trajectories very well. Is that related to spatial understanding built up from 3D work?

Barkley: I don't think it's that directly related.

But ever since the first version of the video model, we've paid close attention to camera movement, including how many camera position changes a video contains.

So early on, users generally said that although Luma AI's model was sometimes unstable, it could deliver a lot of camera movement and complex character motion.

I think our 3D experience did help us realize that, when building visual models, richer camera positions and more complex motion make users more willing to watch generated videos.

Beyond that, though, I don't think our past experience, including the 3D models themselves, carries over all that much.

"Intelligent Emergence": So the key to a technology pivot is bringing in new technical talent?

Barkley: Yes.

Sustaining a Model's Popularity Takes a Product

"Intelligent Emergence": After Dream Machine took off in June, how did you think about user retention?

Barkley: When we released Dream Machine, we knew we would need products to meet users' ongoing, steady needs.

For example, if you're a long-time ChatGPT user, even as many models with capabilities similar to GPT come out, you'll probably still stick with ChatGPT, because through long-term use it has learned your habits and understands your intentions better.

There will always be better models in the industry, but ultimately it's the product that retains users.

"Intelligent Emergence": When did the team start planning this AI design platform?

Barkley: The idea actually dates back to when we first started building the video model, so the product was developed in parallel with the video model starting in December last year (2023).

During product design, however, we realized that to cover the entire design workflow, it also had to generate images. So five months after the video model's release, once we felt the image model was good enough, we combined the two into a single product.

"Intelligent Emergence": Who are the target users of the platform? Professional designers or the general public?

Barkley: We think the original Dream Machine users were more professional: they at least had experience making AI films or knew how to write prompts to get better results.

But we hope the current product can be used by people who have never used AI or have no design experience. If they need this kind of workflow at work, for instance, they can get it done easily through a few rounds of conversation with the AI.

The Dream Machine video model we released in June still had some barriers to use. Even then, we hoped ordinary people could access these visual tools too, something like a GPT for the visual field.

But the visual field is a very niche vertical. The thinking behind our design platform is how to expand that user base. Only by expanding the group can AI in the