OpenAI wins a gold medal at the IOI, but loses to three Chinese high school students

Just now, OpenAI officially announced that it has won a gold medal at the IOI!

Its reasoning system set a new record in the online AI track of this year's IOI:

With a total score of 533.29, it ranked sixth against the 330 human contestants from around the world and first among all AI entrants.

PS: Of the five human contestants who outscored the AI, three are Chinese: Liu Hengxi (Zhenhai High School, Ningbo), Fan Sizhe (Hailiang Senior High School, Zhuji, Zhejiang), and Chen Xinyang (Hangzhou No. 2 High School).

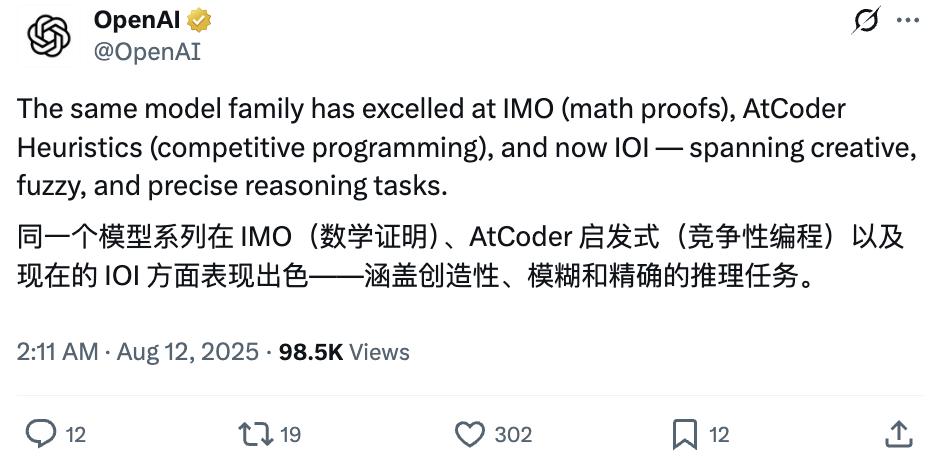

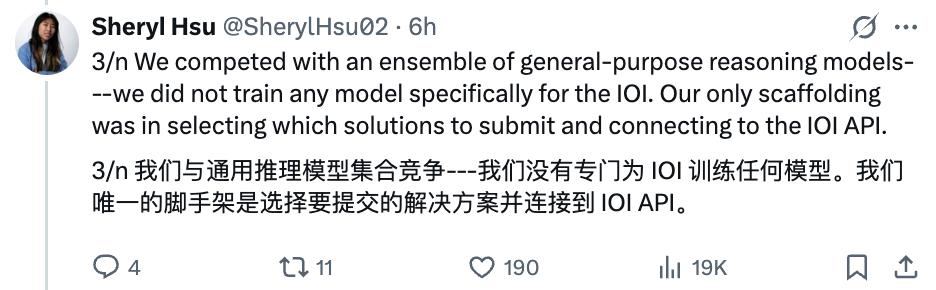

Interestingly, OpenAI stated that it did not train a new model specifically for the IOI this time; instead, it entered an ensemble of several general-purpose reasoning models.

Moreover, this is the same system that OpenAI claimed won a gold medal at the IMO not long ago.

In terms of results, this reasoning system is a significant step up from the model OpenAI entered at last year's IOI.

For IOI 2024, OpenAI trained o1 specifically for the competition, producing o1-ioi, which scored only 213 points under the strict competition rules.

This year, it won gold outright with general-purpose models and leapt up the rankings, which stunned netizens.

However, OpenAI's earlier claim that its model won a gold medal at the IMO sparked considerable controversy, so netizens are noticeably more cautious about this IOI result:

Is this a genuinely impressive achievement, or just another marketing gimmick?

Meanwhile, many netizens were still calling out, "Give me back 4o."

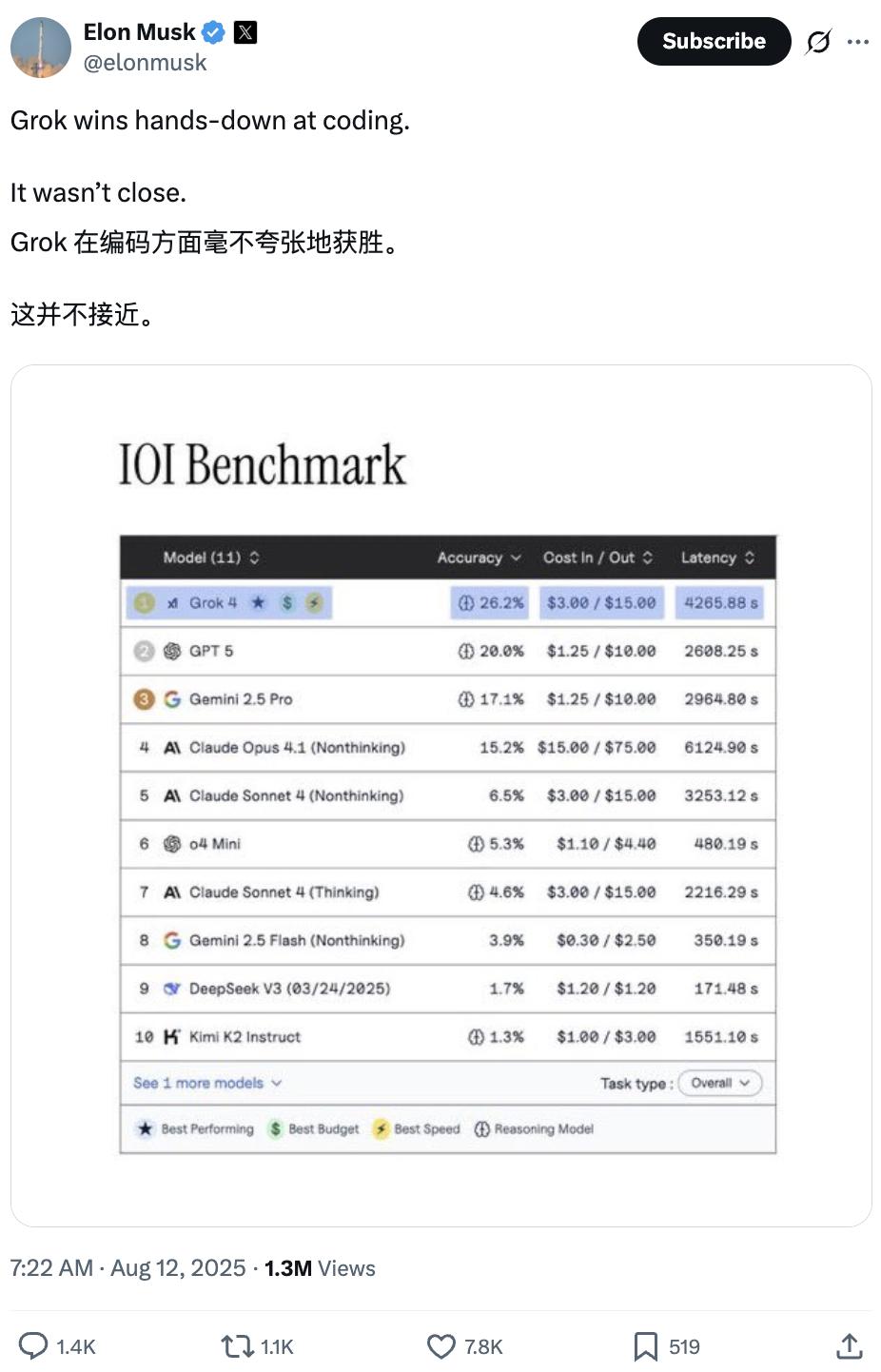

Even more amusingly, Elon Musk promptly posted an IOI benchmark leaderboard showing Grok 4 in first place for coding, ahead of GPT-5 (wink).

Let's take a closer look at how OpenAI's reasoning system won gold.

Same rules as humans: 5 hours and a 50-submission limit

The IOI (International Olympiad in Informatics), the world's top computer science competition for secondary school students, has strict, standardized rules:

The competition runs over two days. Each day, contestants must independently solve 3 difficult algorithmic problems within 5 hours, without internet access or external materials. They submit their solutions as C++ code, which is scored automatically against hidden test cases.

IOI 2025 drew 330 contestants from 84 countries. The maximum possible score was 600 points, the gold-medal cutoff was 438.30 points, and only 28 contestants won gold.
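The way these numbers fit together can be sketched in Python. Two days of three problems gives six problems; the sketch assumes each problem is worth 100 points (which matches the 600-point maximum) and is split into subtasks, and it assumes the subtask-maximum convention used at recent IOIs, where each subtask keeps its best result across all of a contestant's submissions. The article does not quote the 2025 scoring rules, and the function names and sample data below are hypothetical.

```python
# Minimal sketch of IOI-style scoring. Assumptions (not from the article):
# each problem is worth 100 points, split into subtasks, and a subtask's
# score is the best score it received across all of a contestant's submissions.

DAYS = 2
PROBLEMS_PER_DAY = 3
POINTS_PER_PROBLEM = 100   # assumed; 2 * 3 * 100 matches the 600-point maximum
GOLD_CUTOFF_2025 = 438.30  # from the article


def problem_score(submissions: list[list[float]]) -> float:
    """Score one problem from per-submission subtask scores.

    Each inner list holds one submission's points per subtask, in the same
    subtask order for every submission. The best result per subtask is kept.
    """
    if not submissions:
        return 0.0
    best_per_subtask = [max(scores) for scores in zip(*submissions)]
    return sum(best_per_subtask)


def total_score(problems: list[list[list[float]]]) -> float:
    """Sum the per-problem scores across the whole contest."""
    return sum(problem_score(subs) for subs in problems)


max_score = DAYS * PROBLEMS_PER_DAY * POINTS_PER_PROBLEM
print(max_score)  # 600

# Hypothetical problem with subtasks worth 20, 30 and 50 points: the first
# submission solves subtask 1, the second solves subtasks 2 and 3.
example = [[20, 0, 0], [0, 30, 50]]
print(problem_score(example))  # 100

# OpenAI's reported score clears the 2025 gold cutoff.
print(533.29 >= GOLD_CUTOFF_2025)  # True
```

Taking the per-subtask maximum means a contestant can combine partial solutions from different submissions, which is why the example problem reaches the full 100 points even though no single submission does.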

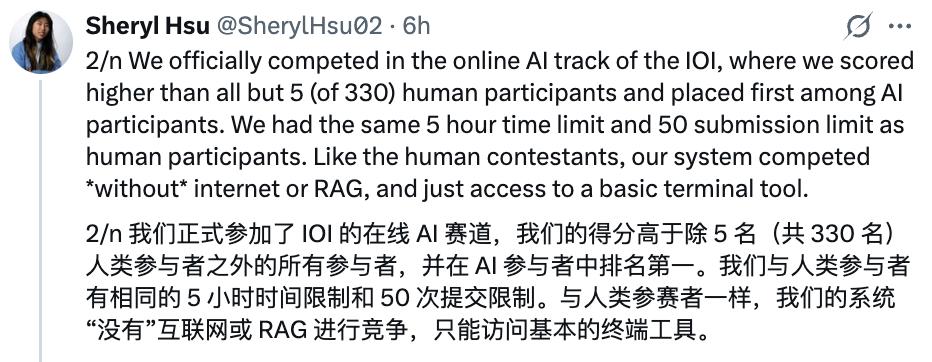

OpenAI said it officially competed in the IOI's online AI track under the same rules as human contestants, including the 5-hour time limit and the limit of 50 submissions.

The AI system also had no internet access and used no retrieval-augmented generation (RAG) during the competition; it could use only basic terminal tools.

Specifically, an ensemble of several powerful reasoning models generated candidate programs, these programs were run, and the best solutions were submitted. The only scaffolding was the logic for choosing which attempts to submit and the interface to the competition API.
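The generate, run, and submit loop described above can be illustrated with a minimal Python sketch. It assumes each ensemble member is a callable that returns candidate programs, a local evaluator that scores a program by running it (for example, the share of self-generated tests it passes), and a `submit` function standing in for the competition API. All of these names are hypothetical: OpenAI has not published its selection logic, and the ranking rule here (best local score first, stopping at a full score or at the 50-submission limit) is an assumption for illustration.

```python
from collections.abc import Callable
from dataclasses import dataclass

# Hypothetical sketch: OpenAI has not published its selection logic.
SUBMISSION_LIMIT = 50  # from the article; assumed here to apply per problem


@dataclass
class Candidate:
    model: str                # which ensemble member produced the program
    source: str               # program text (C++ at the real IOI)
    local_score: float = 0.0  # result of running it locally


def generate_candidates(
    models: dict[str, Callable[[str], list[str]]], problem: str
) -> list[Candidate]:
    """Ask every model in the ensemble for candidate programs."""
    return [Candidate(name, src) for name, gen in models.items() for src in gen(problem)]


def select_and_submit(
    candidates: list[Candidate],
    evaluate: Callable[[str], float],
    submit: Callable[[str], float],
    limit: int = SUBMISSION_LIMIT,
    full_score: float = 100.0,
) -> list[float]:
    """Rank candidates by a local evaluation, then submit the best ones first.

    Stops when the submission budget is used up or a submission
    reaches the problem's full score.
    """
    for cand in candidates:
        cand.local_score = evaluate(cand.source)
    ranked = sorted(candidates, key=lambda c: c.local_score, reverse=True)
    results: list[float] = []
    for cand in ranked[:limit]:
        score = submit(cand.source)  # stand-in for the competition API call
        results.append(score)
        if score >= full_score:
            break
    return results


# Usage with stub models, a stub local evaluator and a stub grader.
models = {
    "model_a": lambda problem: ["a1", "a2"],
    "model_b": lambda problem: ["b1"],
}
local = {"a1": 0.4, "a2": 0.9, "b1": 0.7}         # e.g. share of local tests passed
official = {"a1": 40.0, "a2": 100.0, "b1": 70.0}  # hidden-test score per program
results = select_and_submit(generate_candidates(models, "problem"), local.get, official.get)
print(results)  # [100.0]
```

Because "a2" ranks highest locally and scores full marks, only one of the 50 allowed submissions is spent; a weaker local evaluator would burn more of the budget before hitting a full score.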

In the IOI's online AI track, problems are retrieved and solutions are submitted through an API, without direct supervision by the competition organizers.

OpenAI's latest reasoning system outperformed 98% of the human contestants, a sharp contrast with last year in both results and methods.
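As a quick check on the 98% figure, the arithmetic can be written out in Python with the article's numbers: the AI placed sixth, so five human contestants scored higher and the remaining 325 of 330 scored lower. This assumes no ties with the AI's score.

```python
# Figures from the article; assumes no ties with the AI's score.
human_contestants = 330
humans_ahead = 5  # the AI ranked sixth, behind five human contestants

outscored = human_contestants - humans_ahead
share = outscored / human_contestants
print(outscored)       # 325
print(f"{share:.0%}")  # 98%
```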

At IOI 2024, OpenAI used o1-ioi, a dedicated model derived from o1 and fine-tuned with reinforcement learning for programming tasks.

o1-ioi relied heavily on a complex, hand-designed test-time strategy, similar to the one used by AlphaCode, which included:

- Generating 10,000 candidate solutions for each subtask

- Clustering and ranking the solutions using test cases the model generated itself

- Using a learned scoring function to select the final 50 solutions to submit
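The clustering-and-selection steps above can be illustrated with a toy Python sketch in the spirit of AlphaCode-style filtering. Each candidate is a Python function standing in for a compiled program; candidates that produce identical outputs on every generated input fall into one cluster, larger clusters are ranked first, and a hypothetical learned scorer picks one representative per cluster, up to the 50-submission limit. Ranking clusters by size and the scorer interface are assumptions for illustration, not the published o1-ioi recipe, and the toy numbers are far smaller than the 10,000 candidates per subtask described above.

```python
from collections import defaultdict
from collections.abc import Callable, Hashable

Program = Callable[[int], Hashable]  # stand-in for a compiled candidate solution


def run_safely(prog: Program, x: int) -> Hashable:
    """Run one candidate on one input, mapping crashes to a sentinel output."""
    try:
        return prog(x)
    except Exception:
        return "<runtime error>"


def cluster_by_behaviour(programs: dict[str, Program], test_inputs: list[int]) -> list[list[str]]:
    """Group candidate names whose outputs agree on every generated input."""
    clusters: dict[tuple, list[str]] = defaultdict(list)
    for name, prog in programs.items():
        signature = tuple(run_safely(prog, x) for x in test_inputs)
        clusters[signature].append(name)
    return list(clusters.values())


def pick_submissions(
    programs: dict[str, Program],
    test_inputs: list[int],
    score: Callable[[str], float],
    limit: int = 50,
) -> list[str]:
    """Rank clusters largest-first; submit the best-scored member of each."""
    clusters = cluster_by_behaviour(programs, test_inputs)
    clusters.sort(key=len, reverse=True)
    return [max(cluster, key=score) for cluster in clusters[:limit]]


# Toy task: compute x squared. "dbl" is wrong; "crash" is wrong and fails on x = 0.
candidates: dict[str, Program] = {
    "mul": lambda x: x * x,
    "pow": lambda x: x ** 2,
    "abs": lambda x: abs(x) * abs(x),
    "dbl": lambda x: x + x,
    "crash": lambda x: 1 // x,
}
generated_inputs = [0, 2, 3, -1]  # stand-in for model-generated test inputs
learned_score = {"mul": 0.8, "pow": 0.95, "abs": 0.6, "dbl": 0.3, "crash": 0.1}  # hypothetical

picks = pick_submissions(candidates, generated_inputs, learned_score.get, limit=2)
print(picks)  # ['pow', 'dbl']
```

The three correct squaring programs agree on every generated input and form the largest cluster, so one of them is submitted first; clustering keeps near-duplicate solutions from eating into the limited submission budget.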

Despite all this engineering, o1-ioi scored only 213 points at IOI 2024, placing in the 49th percentile and missing out on a bronze medal.

One More Thing

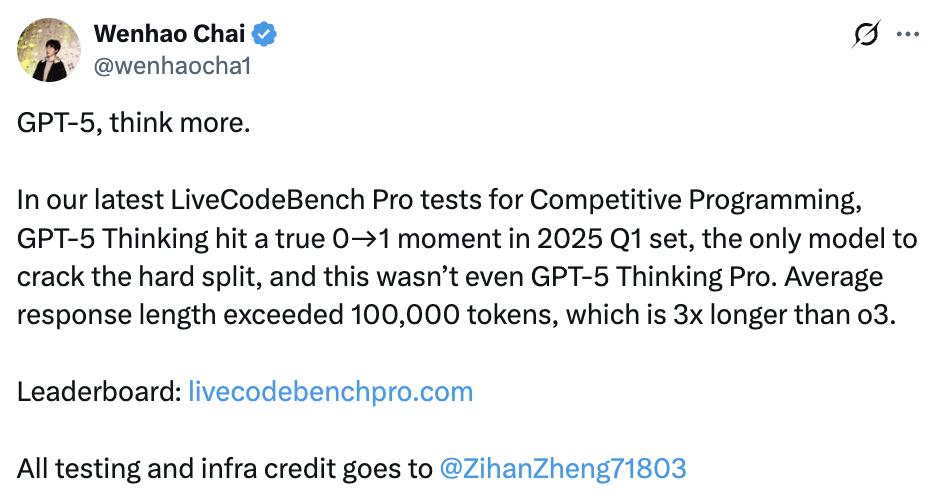

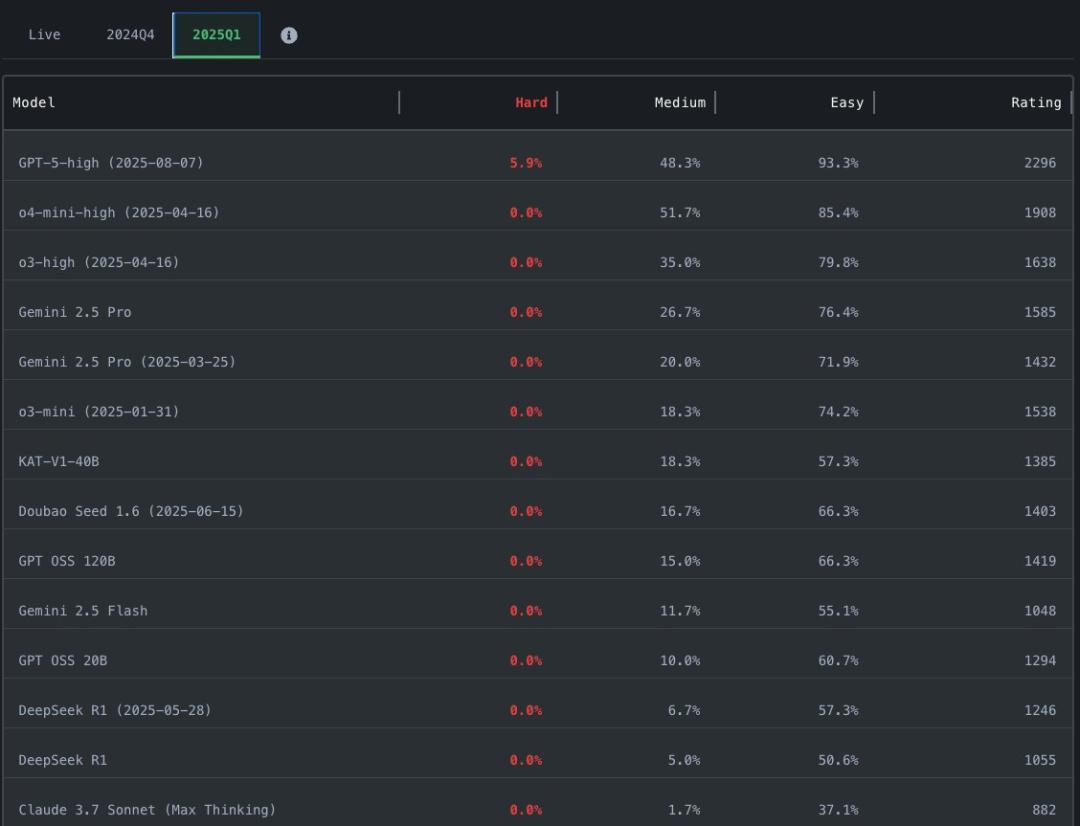

Saining Xie's team recently launched LiveCodeBench Pro, a live benchmark of competition-level programming problems drawn from the IOI, Codeforces, and ICPC.

GPT-5's latest results are now out:

GPT-5 Thinking achieved a breakthrough on the problem set from the first quarter of 2025: it is the only model to crack the hard problems, and this isn't even the more advanced "Thinking Pro" version.

GPT-5's average response length exceeded 100,000 tokens, three times that of o3.

Reference Links

[1] https://x.com/OpenAI/status/1954969035713687975

[2] https://x.com/rohanpaul_ai/status/1954992741101998099

[3] https://x.com/wenhaocha1/status/1954751124050989213

[4] https://x.com/elonmusk/status/1955047197487272362

This article is from the WeChat official account "QbitAI" (author: Xifeng) and is republished by 36Kr with authorization.