$100 million couldn't buy his dream: he left OpenAI over a single remark from Altman.

The global AI arms race has burned through $30 billion, yet fewer than a thousand scientists are truly working to prevent a "Terminator" scenario! Benjamin Mann, a core member of Anthropic, says that once humanoid robots are ready, all they will lack is a "brain", and that day could arrive as early as 2028.

$100 million, or safeguard humanity?

While Zuckerberg waves around nine-figure checks in a frenzy to poach talent, Benjamin Mann, a former safety researcher at OpenAI, simply remarks, "Money can buy models, but it can't buy safety."

In a recent podcast, Benjamin Mann, co-founder of Anthropic, explains why OpenAI's safety team left and why he firmly said "no" to $100 million.

Top AI talent defines the era and really is worth $100 million

Recently, Zuckerberg has been spending heavily to poach top AI talent.

This is a sign of the times: the AI these people build is extremely valuable.

Benjamin Mann said that Anthropic has not lost many people to these offers:

At Meta, the best outcome is to "make money"; but at Anthropic, the best outcome is to "change the future of humanity." The latter is more worthwhile.

For Mann, this choice is not difficult: he will never accept Meta's sky-high offer.

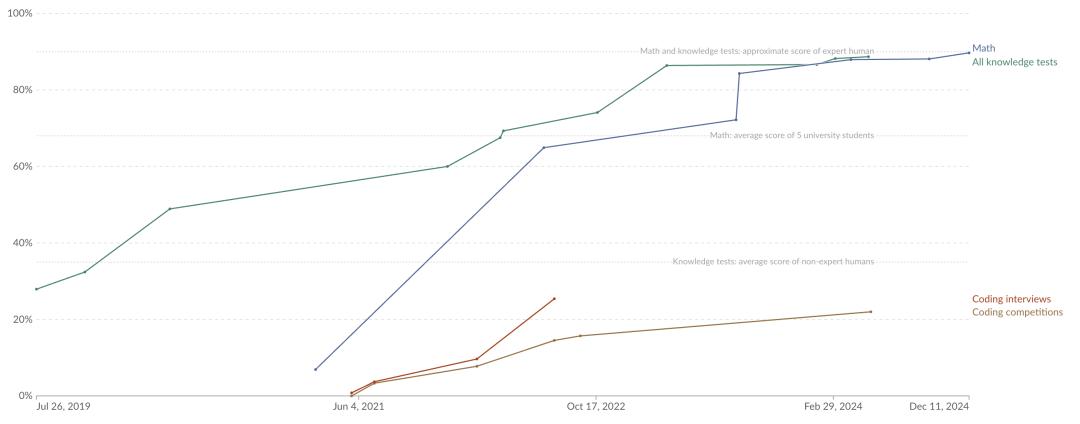

We are in an unprecedented Scaling era, and it will only get crazier.

Anthropic's annual capital expenditure doubles at an astonishing speed.

This is the current state of the industry: a good model is a money-printing machine.

But at Anthropic, Meta's "money offensive" has not caused much of a stir. Apart from a sense of mission, there are two other secrets:

Team atmosphere - "There is no aura of big shots here. Everyone just wants to do the right thing."

Freedom of choice - "I understand if someone accepts a sky-high offer for the sake of their family."

Benjamin Mann confirmed that the $100 million signing bonuses are real.

Mann did the math: a 5% improvement in inference efficiency would save hundreds of millions of dollars across the entire inference stack. Paying $100 million for such an improvement yields an astonishing return.
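As a rough illustration of that arithmetic, the sketch below computes the yearly saving from a fractional efficiency gain and the spend level at which a $100 million offer pays for itself within a year. Only the 5% gain and the $100 million figure come from the interview; the spend levels are hypothetical placeholders, and the sketch assumes that a 5% efficiency gain means inference cost falls by 5%.

```python
OFFER = 100e6   # the $100 million offer mentioned in the article
GAIN = 0.05     # the 5% efficiency improvement mentioned in the article


def annual_savings(inference_spend: float, efficiency_gain: float) -> float:
    """Yearly saving, assuming cost falls by the same fraction as the gain."""
    return inference_spend * efficiency_gain


def break_even_spend(offer: float, efficiency_gain: float) -> float:
    """Yearly inference spend at which one year of savings equals the offer."""
    return offer / efficiency_gain


# Hypothetical yearly inference budgets, NOT figures from the interview.
for spend in (1e9, 2e9, 5e9, 10e9):
    saved = annual_savings(spend, GAIN)
    print(f"spend ${spend / 1e9:.0f}B -> saves ${saved / 1e6:.0f}M per year")

print(f"break-even spend: ${break_even_spend(OFFER, GAIN) / 1e9:.1f}B per year")
```

Under these assumptions, savings in the "hundreds of millions" imply a yearly inference bill of several billion dollars, which is the scale at which the offer looks cheap.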

Looking ahead, after several rounds of exponential growth, the figures could reach trillions. At that time, it's hard to imagine the significance of these astonishing returns.

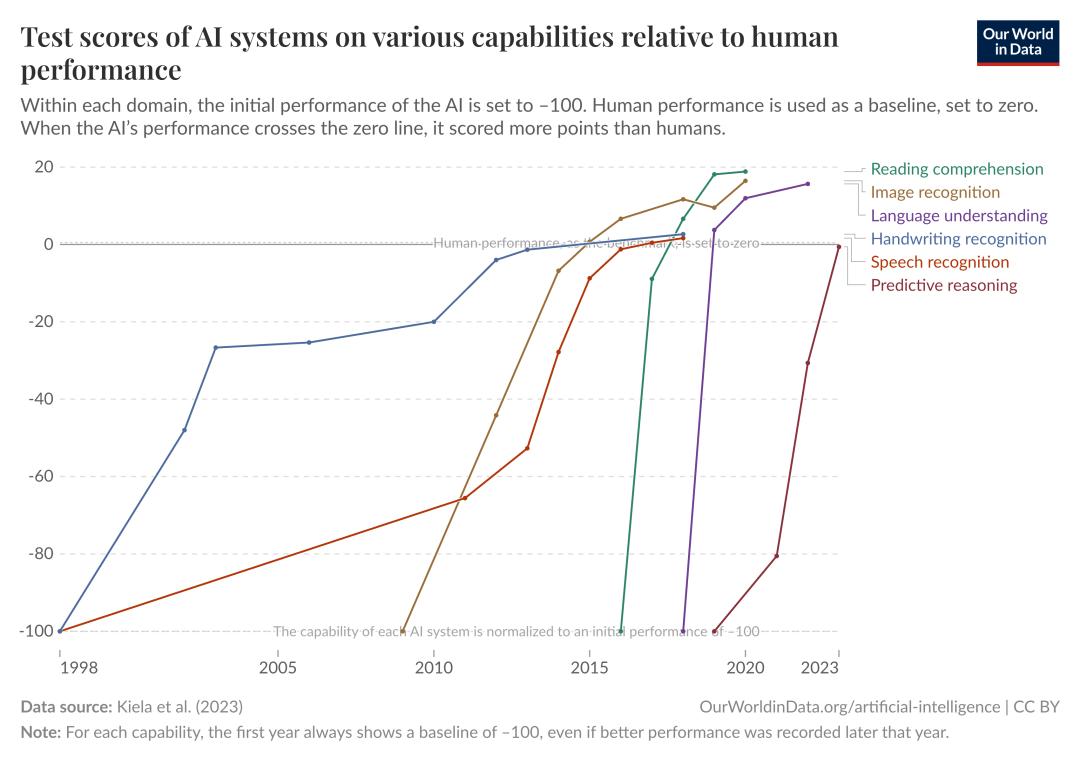

After all, he said that AI development has not stagnated, the Scaling Law is still valid, and the progress continues to accelerate.

The Scaling Law is not dead: a "new species" is born every month

First, the pace of model releases is accelerating:

In the past, we might release one model a year;

Now, through post-training, a new model is released roughly every one to three months.

Why does it seem that progress has slowed down? Part of the reason is that, to ordinary users, the new models do not seem much smarter than the old ones.

In fact, the Scaling Law is still valid, but to keep it going, we need to shift from pre-training to reinforcement learning.

Additionally, on certain tasks, the intelligence of the models is approaching saturation.

After a new benchmark is released, performance on it saturates within 6 to 12 months.

So, the key limitation might be the test itself: better benchmarks and more ambitious tasks are needed to show the real intelligent breakthroughs of the models.

This also led Mann to think about the definition of AGI.

The Economic Turing Test, a weathervane for the singularity

He believes that the term AGI has strong emotional connotations, and he prefers to use the term "transformative AI".

Transformative AI focuses on whether it can bring about changes in society and the economy.

The specific measure is the Economic Turing Test:

If an employer decides to hire a candidate after a 1 to 3 month trial period in a specific position, only to find out later that the candidate is actually a machine rather than a human, then the AI has passed the Economic Turing Test for that role.

In other words, AGI is not about test scores, but about the employer's hiring email!

When AI can pass the Economic Turing Test for about 50% of job positions, weighted by salary, transformative AI has arrived.
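One way to read "about 50%, weighted by salary" is as a wage-weighted share rather than a count of job titles. The sketch below is only an illustration of that reading: the job categories, wage totals, pass/fail flags, and the names `JobCategory`, `wage_weighted_pass_share`, and `THRESHOLD` are all hypothetical and do not come from the interview.

```python
from dataclasses import dataclass


@dataclass
class JobCategory:
    name: str
    total_wages: float  # aggregate wages paid in this category (arbitrary units)
    ai_passes: bool     # whether AI passes the hiring trial for this role


def wage_weighted_pass_share(jobs: list[JobCategory]) -> float:
    """Fraction of total wages paid in roles where AI passes the test."""
    total = sum(job.total_wages for job in jobs)
    if total <= 0:
        raise ValueError("total wages must be positive")
    passed = sum(job.total_wages for job in jobs if job.ai_passes)
    return passed / total


THRESHOLD = 0.5  # the "about 50%" mark from the article

# Invented example data, for illustration only.
jobs = [
    JobCategory("role A", 40.0, True),
    JobCategory("role B", 35.0, False),
    JobCategory("role C", 25.0, True),
]

share = wage_weighted_pass_share(jobs)
print(f"wage-weighted share: {share:.2f}, threshold reached: {share >= THRESHOLD}")
```

Weighting by wages means a few highly paid roles can move the share more than many low-paid ones, which is why the result can differ from a simple headcount of job titles.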

Because social systems and organizations have inertia, this change will start very slowly at first.

Mann and Dario Amodei both foresee that this could lead to an unemployment rate of up to 20%, especially in white-collar jobs.

From an economic perspective, there are two types of unemployment: lack of skills or the complete disappearance of jobs. The future will be a combination of the two.

If we have superintelligent, safely aligned AI in the future, then, as Dario said, we will have a group of geniuses working in data centers, driving positive changes in science, technology, education, and mathematics. How amazing that would be!

But this also means that in a world where labor is almost free, you can have experts do anything you want. So, what will work look like?

Therefore, the most terrifying thing is that the transition from the world where people still have jobs today to such a world 20 years from now will be very rapid.

But precisely because the change is so drastic, it is called the "singularity" - because this point in time cannot be easily predicted.

In physics, a singularity is a point with infinite density, infinite gravitational force, and infinite spacetime curvature, where the known physical laws do not apply.

The changes at that time will be extremely rapid and impossible to foresee.

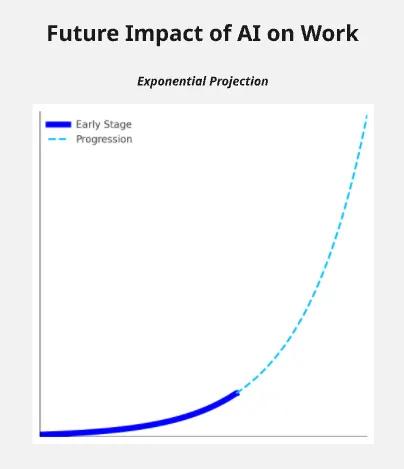

As AI capabilities improve, many tasks originally done by humans are being replaced by AI, especially repetitive, simple, and standardized jobs.

This change has a rapid and profound impact on the workplace.

For example, in software engineering, the Claude Code team uses Claude to write about 95% of its code. Seen another way, this means the same number of people can produce far more code.

A similar situation also occurs in the customer service field. AI tools can automatically solve 82% of customer requests, allowing human employees to focus on more complex problems.

But for low-skill jobs with limited room for improvement, the replacement will be very drastic. Society must prepare in advance.

A survival guide for the future

What will the future workplace be like?

Even though he is at the center of this transformation, Benjamin Mann believes that he is not immune to the risk of being replaced by AI.

One day, this will affect everyone.

But the next few years are crucial. Currently, we have not reached the stage where humans can be completely replaced.

We are still at the starting point of the exponential curve, the flat part, and this is just the beginning.

So, having excellent talents is still extremely important, which is why Anthropic is actively recruiting.

The host changed the question and continued to ask, "You have two children. What kind of education do you think will enable the next generation to survive in the future?"

Mann believes that current education focuses on imparting knowledge and skills, but these traditional standards may no longer apply in the future.

Instead of preparing children just for exams, it's better to cultivate their curiosity and problem-solving abilities.

He hopes his daughter can explore fields she is interested in and develop independent thinking and creativity.

In a world dominated by AI, creativity and emotional intelligence will be the keys to competing with machines.

The future is full of uncertainties. Embracing change, continuous learning, and adaptation will be the keys to success.

Insider story: OpenAI's first split

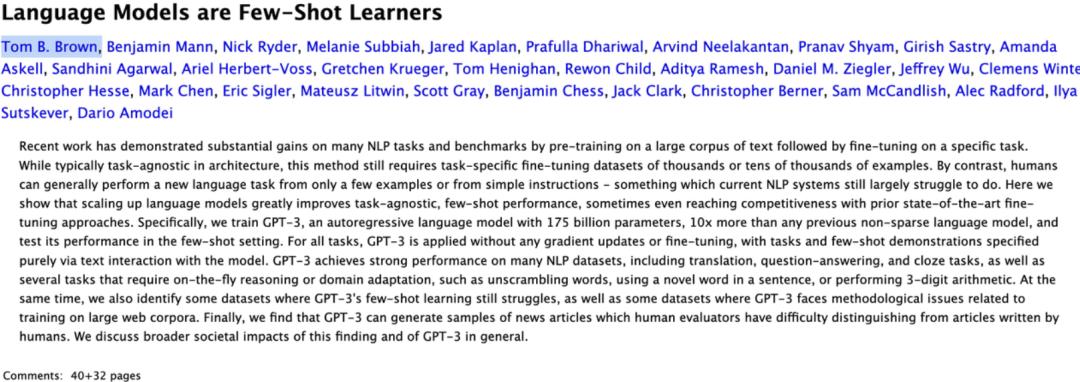

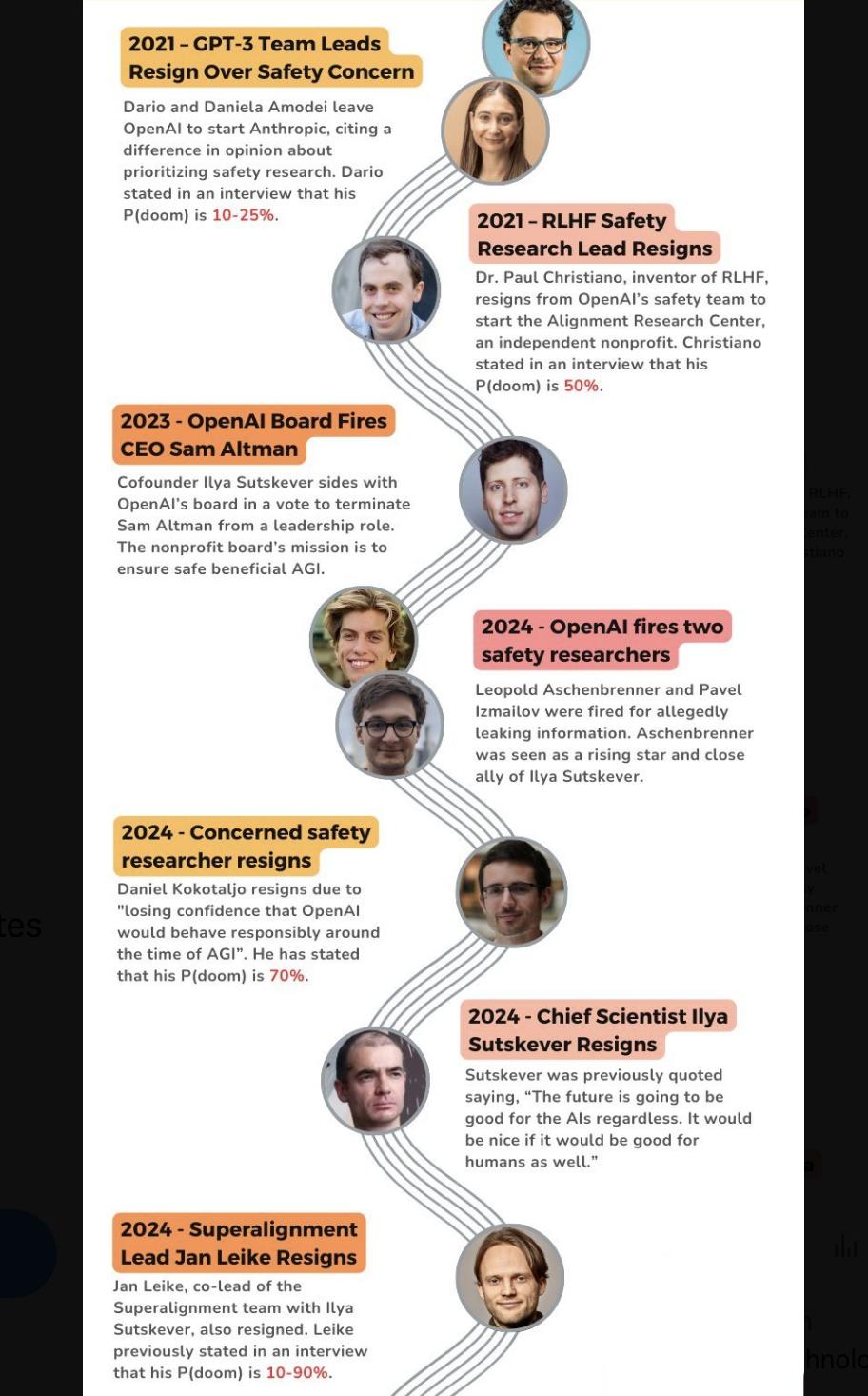

As is well known, at the end of 2020, Benjamin Mann and eight other colleagues left OpenAI to found Anthropic.

What kind of experience made them decide to start their own business?

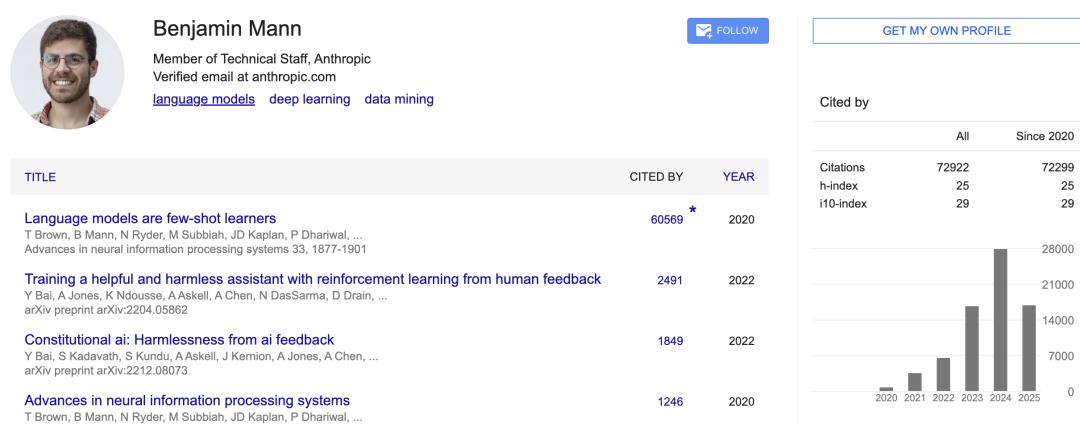

At that time, Mann was a member of OpenAI's GPT-2 and GPT-3 projects and one of the lead authors of the GPT-3 paper.

Paper link: https://arxiv.org/abs/2005.14165

He gave many technology demonstrations to Microsoft, helping bring in a $1 billion investment for OpenAI. He was also responsible for migrating GPT-3 to Microsoft's systems so it could be deployed and served on Azure.

At OpenAI, he was involved in both research and product implementation.

At that time, Altman always said:

OpenAI's three "camps" need to balance each other: the safety camp, the research camp, and the startup camp.

Mann thought this was absurd: safety should be everyone's core goal, not the responsibility of one "camp".

After all, OpenAI's mission is to "make artificial general intelligence safe and beneficial to all of humanity".

At the end of 2020, the safety team found that:

• The priority given to safety kept falling;

• Boundary risks were being ignored.

In the end, the entire safety leadership team left together. This was the fundamental reason for their departure.

Their view was simple: an AI lab should make safety the top priority while still working at the frontier of research.

With problems like model sycophancy coming to light, it's hard to say that OpenAI really cares about safety, and its safety researchers keep leaving.

Claude is less likely to make such mistakes because Anthropic has invested a lot of effort in real alignment, rather than just trying to optimize user engagement metrics.

Life mission: AI safety

Very few people in the world are truly dedicated to AI safety.

Even now, with global annual capital expenditure on AI infrastructure at $30 billion, Mann estimates that fewer than a thousand people work full-time on the "alignment problem". This has strengthened their determination to focus on safety.

Mann used to worry that pursuing commercial speed would mean sacrificing safety.

But after the release of Opus 3, he realized that alignment research actually makes the product more appealing, and the relationship between the two is a convex one of "mutual