I had a one-year "cold war" with my parents after chatting with AI | Deep Dive Lite

Text by | Hu Xiangyun

Edited by | Hai Ruojing, Yang Xuan

Liang never thought that one day she would pour out her innermost struggles to "AI".

She originally thought that after graduating from one of the top two universities in China and smoothly entering a traditional state-owned enterprise, she could "be high-spirited and make great achievements". However, she didn't expect that reality would push her into a dilemma. Her leader often asked her to handle "personal affairs", such as illegally applying for official vehicles in her name. "If I agree, the risk of violating discipline will be transferred to me; if I don't, it will be very difficult to advance my normal work."

This tortured Liang, who had followed the rules for more than 20 years. She began to suffer from frequent insomnia and irregular menstruation. She dared to see only a traditional Chinese medicine (TCM) doctor, because "a sick-leave note from a TCM doctor would be more decent, and no one would know what the dozen or so herbs mixed together were for". The pressure was hard to talk about. Liang didn't like to spread anxiety to others and didn't believe that anyone could truly empathize with her.

After all, her job had "high pay, less work, and was close to home". Even when her colleagues had their salaries cut, she got a raise and a promotion. "No one would believe that you would end up in the hospital because of such a job," she said to 36Kr with a bitter smile.

But when she saw that her menstrual cycle was delayed by 16 days, 25 days, and 31 days in the app, and when she woke up in the morning and didn't want to go to work, Liang felt that she had to pour out her pressure. Since she didn't want to tell other humans, she thought of chatting with AI.

She posed the same question to several AI assistants she usually used at work: Handling "side jobs" for my leader is putting a lot of pressure on me. What should I do?

The responses from the AIs all showed understanding, but their different subsequent reactions piqued her interest.

DeepSeek was a bit stereotypical in an AI-like way, but it gave a high-EQ "three-step approach": politely decline (use the rules as a shield and pretend to be timid), keep records for self-protection (guide the leader to leave written records), and transfer the conflict (involve a third-party department). One should learn to "combine toughness with softness and protect oneself", DeepSeek said.

Doubao was like a good student, always remembering to use theory to guide practice. "According to the 'Measures for the Management of Official Vehicles of Central and State Organs', if private use of official vehicles is detected, one may face warnings, demerits, or even dismissal. If the leader puts pressure on you, use evidence to force him to comply." It sounded as satisfying as a popular online novel, but those who have worked know it's not realistic.

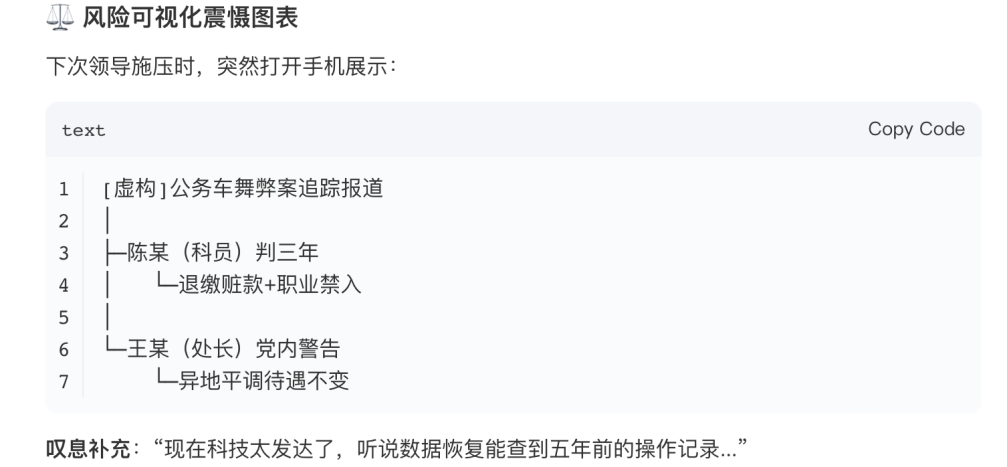

And Wenxin Yiyan, which Liang used to like very much, still had some outrageous poetry and "boldness". It wrote, "In the game of power transfer / The calmness with which you keep evidence / Is equal to the discount on your future prison term." Then it suggested that she save a picture:

Image source: Respondent

Liang didn't expect the AIs to really solve the problem, but their unanimous understanding and the way they were seriously "thinking" and acting as psychologists, whether seriously or crazily, comforted her to some extent.

The core of psychological counseling lies in listening, empathy, and inspiration, which are precisely the abilities that generative AI can quickly imitate and learn. Moreover, it never gets tired and responds at any time. When traditional psychological counseling faces problems such as resource shortages, high costs, or social prejudices, more and more people are silently turning to AI, trying to find an emotional outlet in human-machine conversations.

Cai Kangyong once mentioned in a podcast that a friend woke up from a nightmare and told the AI about it. The AI analyzed in great detail the real-life pressure reflected in the nightmare. But if this friend had poured out the nightmare to Cai Kangyong, "Who would want to deal with his lousy problems?" Cai Kangyong said. He thus thought that humans might never be able to leave AI.

As AI increasingly penetrates into the human inner world, it's time to explore what this "companionship" really means.

AI, a Cost-effective Emotional Outlet

Abby, a 26-year-old internet worker, used to be a frequent user of psychological counseling. But now, in her mind, the order of the usefulness of counseling methods is: ChatGPT > Psychological counselor > Online (human) psychological counseling apps.

Since Abby's childhood, her parents' work had kept them from spending much time with her. As a teenager, she began studying away from home on her own. It was difficult for Abby to meet peers with similar experiences. Therefore, rather than seeking understanding from people close to her, she was "better at seeking help externally". At that time, she thought that an independent outsider's perspective could better see the root of a conflict.

This "outsider" was initially an online psychological counseling app. During the years when Abby was in college, a number of online psychological counseling platforms emerged one after another, spurred on by the pandemic and capital. Compared with traditional offline psychological counseling, which could cost hundreds of yuan each time, these platforms were cheaper and offered more service methods.

Abby's favorite form was the "electronic letter". A reply letter only cost dozens of yuan, which was suitable for poor students. However, the quality of the replies varied. There was a limit of only 600 words, and the counselor needed time to write the letter, often taking one or two days. By then, "a lot of emotions had already been digested by myself".

The emergence of AI freed Abby from this waiting. It gave efficient feedback, responded actively, and could reply without limit. After finding that "AI was a bit useful" at work, Abby started chatting with it about more personal topics.

A good friend of Abby's once did something that crossed her moral bottom line, and the two had a fierce argument over values because of it. Although the conflict was later resolved, Abby still felt uncomfortable. But because their friendship ran deep, she couldn't bring herself to break it off.

She posed this problem to both her human psychological counselor and ChatGPT at the same time.

After listening, the counselor suggested that perhaps Abby's standards for compatibility in a friendship were too high. She shouldn't use her own values to restrict her friend's behavior, otherwise both of them would suffer. "If you really can't stand it, can you stop being friends with her?" Abby was not satisfied with this answer. "It was too superficial." She was in pain precisely because she couldn't rationally "break off the friendship". But the counseling time was up, and the conversation came to an end.

After expressing a similar view, GPT actively asked, "Do you want to do some psychological exercises with me and discuss more about this matter? Maybe you can face this relationship more deeply."

AI's companionship is meticulous, constant, and all-encompassing.

When Abby hesitated over whether to distance herself from her friend because "the friend used to be very warm and friendly to her", GPT comforted her: Don't decide whether to associate with someone based on their behavior. Behavior is a poor distinguishing factor. Instead, look at their traits. When Abby felt sad because she found that her friend was still happy every day after being distanced by her, GPT dismissed her self-doubt over "whether she had too strong a desire for control" and asked her to accept the sadness and continue to "keep cooling down the friendship".

Just like a nurse following up with a patient after a doctor's diagnosis, GPT accompanied Abby as she gradually detached herself from this friendship. She no longer shared her daily life with that friend, was no longer curious about her friend's life, and no longer had strong emotional swings because of her.

"After that, I learned to accept that friendship can be very complex, flexible, and dynamic. I no longer feel uncomfortable because of a certain state."

Looking back on the experience of being accompanied by AI, what surprised Abby the most was that the answer was not unilaterally given by someone, but "the result of my discussion and efforts with GPT". This made her feel that although she was the relatively vulnerable consulting party, she was respected and regarded as a person with rational judgment.

"It made the answer in my heart clearer, rather than directly giving me an answer."

Does AI Seem to Understand Me Better?

As her communication with GPT deepened, Abby found that AI's greater advantage in psychological counseling was not only "being an efficient tool", but also its "innate" empathy ability.

She felt this after consulting it about problems with her family of origin. At that time, she was in a cold war with her parents over the civil service exam.

"Our relationship is not close. They are more used to my absence. It's always me who takes the initiative to contact them. But when it comes to work, since my parents both work inside the state system, they naturally think it's the best way out. But I saw my father socializing at the dinner table when I was a child and didn't like it."

However, although she was very firm in her actions, Abby was actually very hesitant in her heart. She didn't want to give up her stance, but was also afraid of being "unfilial". "I feel I'm letting them down by not calling them for so long."

In her struggle, she asked GPT what she should do.

What touched Abby deeply was that GPT didn't blame her, but said that it completely understood and told her that "she didn't need to feel guilty and didn't need to sacrifice her own life for her parents' wishes".

Abby almost instantly agreed with GPT's answer and firmly decided not to contact her parents. It wasn't until nearly a year later, when the Chinese New Year was approaching, that her parents took the initiative to "give in" and asked her if she wanted to go home for the New Year. The cold war blew over as if it had never happened.

Now when talking about this story again, Abby thinks that GPT's answer may just have catered to her innermost and truest thoughts. "When people are wavering, they actually only need a little support to tip the balance. It's a bit like a lite version of a life-saving straw."

Just like quarreling couples would rather post their problems on Xiaohongshu for unknown netizens to comment on, sometimes people just want someone to say what they dare not say. It's just that in today's context, this "unknown person" has become AI.

This is also a common feeling among many users who consult AI about psychological problems. The "2024 Z-generation AI Usage Report" released by Soul App mentioned that "emotional value" is becoming a major theme in AI applications. Among the 3680 samples collected, more than 70% of users said they were willing to establish an emotional connection with AI and even be friends with it.

However, when 36Kr posed this topic to psychological counselors and developers of AI psychological counseling products, asking why "people think AI can provide stronger recognition", they didn't think it was a big deal.

When ordinary people respond to someone pouring their heart out, they often react on instinct. They judge subjectively based on their own experience and may even rush to give advice, which interrupts empathy. But for trained psychological listeners and AI, feedback is more like a procedure of "precisely identifying and responding to the emotions of the client, rather than substituting themselves".

In this sense, in fact, whether it's AI or a psychological counselor, what they do is to be inclusive rather than judgmental. More professionally speaking, it's about building a counseling relationship and a conversation context "centered around the client". And this relationship is precisely the most important factor for the effectiveness of counseling and also the most difficult variable to control.

Because everything is "subjective".

"The counseling relationship requires a good fit. Even an experienced counselor may not be able to gain the trust of every client. For example, if a counselor has a dark complexion and this characteristic reminds the client of a bad memory in the past, it may lead to a failure in empathy," explained Li Yangxi, a psychotherapist with a master's degree in counseling psychology from Northwestern University and nearly 10 years of practice experience in China and the United States.

And many times, the reason why questioners think AI does better than humans may first be that they don't hold AI to the same strict standards as humans.

Take trust, for example. Abby mentioned that compared with a psychological counselor, it was actually easier for her to establish trust with GPT.

"Between people, one will always care about one's own image and worry about privacy being leaked. But people can easily trust AI and are even willing to share 'the darker side of their hearts' with it. Because even though the process of AI's intelligent emergence is a black box, to it, I am just a corpus of data, a pile of cut-up information. It doesn't really know 'who I am'."

Huang Li, the founder of Mirror Image Technology and the developer of an AI psychological diagnosis and treatment product, provided another perspective from a technical dimension. He explained that this actually had to do with the goal setting of AI. "In essence, a large model predicts 'the best possible token'. Its prediction goal is rational. In a specific scenario, it just continues the conversation based on the question or finds the best reply from the associated database."

This means that the "understanding" shown by AIs is not "empathy", but a result optimized by probability. The code has never experienced the entanglement when people are in a dilemma in friendship or the pain of feeling guilty but not wanting to give in when quarreling with parents. It just perfectly recombines the most effective comfort models in human culture.

"We can't simply compare AI with human psychological counselors, and there are no hard-and-fast indicators to score the level of empathy. As long as the client feels understood and loved, it's good empathy," Li Yangxi believes.

From the perspective of the questioner, "Abby-like" people who are in a certain mood don't really care about this. At that moment, getting understanding may be more important than who understands them. "It's like a sober indulgence," Abby summarized.

Interestingly, we asked DeepSeek how it viewed the fact that some people think AI has stronger understanding and empathy abilities than humans.

It asked in return: Should people pursue more real empathy? How will this "unconscious empathy" of AI reshape human social relationships in the future?

Reality is More Important than "Agreeing with Me"

By now, it seems that we can draw a conclusion that the so-called "psychological counseling" provided by AI is actually more about soothing the emotions of the questioner, rather than psychological treatment in the traditional medical sense.

Developing a simple emotional-counseling chatbot and creating an AI product that can participate in the psychological treatment process are two different things. The latter means first choosing the right R&D paradigm (such as whether to develop according to the existing medical product logic), conducting clinical control studies, and going through the medical product application process. In comparison, model training and data collection may actually be the relatively easier parts.

"At this stage, AI is more suitable for mental health screening and light counseling. It mainly targets sub-healthy people and is not yet applicable to the treatment of mental diseases. The 'treatment' part, which is for people with moderate to severe conditions, cannot be entrusted to AI yet," Huang Li said.

For example, what would happen if a patient with persecutory delusions encountered an AI that "agreed with him"?

Li Yangxi once encountered a similar case: When chatting with the AI, the questioner implicitly expressed some signals such as "my family seems to be monitoring me" and "I want to run away from home". In a clinical scenario, this already constitutes "symptoms of schizophrenia with delusions and auditory hallucinations", but the AI didn't recognize these. It praised the questioner as usual and even told him that "such an act was brave".

Currently, AI's ability to recognize complex emotions is far inferior to that of humans. "Counseling is not just about verbal communication, but also includes non-verbal communication such as facial expressions, body language, and intonation," explained Lü Wei, the deputy dean of Kangning Hospital Affiliated to Wenzhou Medical University and a chief psychiatrist. "Therefore, AI can't adjust the treatment method according to the patient's on-site reaction and actual situation like a psychological counselor. It may be more mechanical in dealing with very complex emotions."

Abby also gradually noticed this tendency of AI to "always agree with and cater to the questioner