GMI Cloud King Cui: How Can AI Enterprises Address the Computing Power Shortage and Ensure the Stability of GPU Clusters? | WISE 2024 Business King

The environment is constantly changing, and the times are always evolving. The "Business Kings" follow the trend of the times, insist on creation, and seek new driving forces. Based on the current large-scale transformation of the Chinese economy, the WISE2024 Business Kings Conference aims to discover the truly resilient "Business Kings" and explore the "right things" in the Chinese business wave.

From November 28 to 29, the two-day 36Kr WISE2024 Business Kings Conference was grandly held in Beijing. As an all-star event in the Chinese business field, the WISE Conference is now in its twelfth year, witnessing the resilience and potential of Chinese business in an ever-changing era.

2024 is a somewhat ambiguous year, with more change than stability. Compared with the past ten years, the pace is slowing down and development is more rational. 2024 is also a year of searching for new economic momentum, and new industrial changes place higher demands on every participant's ability to adapt. This year's WISE Conference takes "Hard But Right Thing" as its theme. In 2024, what the right thing is has become a question we want to discuss more.

Computing power, as the core driving force of AI technology, directly determines the performance and efficiency of AI applications. How to secure sufficient and efficient computing power, and how to deal with AI computing power shortages and insufficient AI Infra stability, are urgent problems that AI enterprises must solve as they operate globally.

Regarding the solutions to these problems, King Cui, the President of GMI Cloud Asia-Pacific, shared his thoughts and insights at the conference.

King Cui's Speech Scene

The following is the full text of King Cui's speech, with some deletions:

Good afternoon, friends! I'm King from GMI Cloud. Today, I'd like to share how AI enterprises can make up for their shortcomings overseas and ensure stability in the global layout.

I have been in the cloud computing industry for more than ten years, and I divide its development into three stages. With the birth and rise of OpenAI, the cloud has entered its 3.0 era, which is completely different from the earlier, classic cloud computing era. Enterprise computing needs have shifted from CPU to GPU, and storage needs to be many times faster than before. A new form of cloud is therefore needed, and it was in this context that we established GMI Cloud. The company is just two years old and is headquartered in Silicon Valley, USA. It mainly serves AI enterprises and platform institutions around the world. We obtained NVIDIA Certified Partner status last year.

Why were we able to obtain GPU allocation rights in the Asia-Pacific region? In addition to cooperating with NVIDIA, we maintain close cooperation with GPU manufacturers, who are also our partners. Our advantage is that we can get the latest GPUs and the latest servers in the Asia-Pacific region as soon as they become available. For example, we began offering cloud services on the current H200 in August this year. In Q1 of next year we will receive the GB200, and we will be the first NCP in the Asia-Pacific region to offer GB200 cloud services to external customers. Our goal is to build an AI Cloud platform. We are in the GPU cloud service business and hope to provide stable AI infrastructure for AI enterprises.

Currently, we have 10 data centers worldwide, and the chips are mainly H100 and H200. In October this year, we just announced the completion of a new round of financing, 82 million US dollars, mainly used for the construction of data centers and the development of new H200 GPU cloud services.

Our vision is to become the "TSMC" of the AI cloud-native era, staying true to our original intention of providing partners with a stable AI Cloud. We will not build large models or applications; we will focus only on doing our AI Cloud well.

After you have a basic understanding of GMI Cloud, let's officially talk about the overseas expansion of AI. Because today everyone is talking about going overseas, but no one talks about why they should go overseas. Everyone also feels that the AI era has arrived. What is the difference between this era and the previous ones?

From the perspective of technological development, our generation is very fortunate. We have experienced the Internet era, the mobile Internet era, and now the artificial intelligence era. The Internet and the mobile Internet have both essentially reached the point of universal access. If you are still starting a mobile Internet business in China today, there is probably not much opportunity left. The AI era, by contrast, is rising faster than the previous two, and its impact on society and production is even greater. The opportunities in this era are very clear, which is why I left a big company to join a startup driven by this era.

As of August this year, there were more than 1,700 AI-related apps worldwide, including 280 from China. About 30% of the Chinese apps, approximately 92, have gone overseas. On the slide, you can see that we have listed the top 30 by MAU. From January to September this year, the MAU growth rate of the top 10 exceeded 120% month-on-month.

Computing power is indispensable for every AI application going overseas. The three elements of AI are data, algorithms, and computing power, and computing power is the cornerstone. The domestic and overseas environments differ greatly, and overseas suppliers bring many uncertainties. At the same time, the challenges of the GPU era are much greater than those of the traditional CPU era. Clusters at that scale were operated and maintained in the CPU era, but no one has yet operated and maintained a super-large-scale GPU cluster of more than 100,000 cards. The stability of overseas AI Infra is therefore a huge challenge for AI enterprises going overseas.

For example, Meta released a report some time ago. They used more than 10,000 H100s to train their Llama 405B large model. The run took 54 days in total and had 466 interruptions. Of these, 419 were unexpected, and as many as 58% of the unexpected interruptions (roughly 240) were GPU-related, while only 2 failures were CPU-related. This comparison shows that the stability challenges of GPU and CPU are not of the same magnitude.
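To put the quoted figures in perspective, here is a quick back-of-the-envelope calculation. It is only a sketch: it assumes the 58% share applies to the 419 unexpected interruptions and that interruptions were spread evenly over the 54 days, neither of which is stated in the talk.

```python
# Figures quoted in the talk.
TRAINING_DAYS = 54
TOTAL_INTERRUPTIONS = 466
UNEXPECTED_INTERRUPTIONS = 419
GPU_SHARE_OF_UNEXPECTED = 0.58  # assumption: the share refers to unexpected interruptions
CPU_FAILURES = 2


def mean_hours_between(events: int, days: int) -> float:
    """Average gap between events, assuming they are evenly spread over the run."""
    return days * 24 / events


planned = TOTAL_INTERRUPTIONS - UNEXPECTED_INTERRUPTIONS
gpu_related = round(GPU_SHARE_OF_UNEXPECTED * UNEXPECTED_INTERRUPTIONS)
hours_between_any = mean_hours_between(TOTAL_INTERRUPTIONS, TRAINING_DAYS)
hours_between_unexpected = mean_hours_between(UNEXPECTED_INTERRUPTIONS, TRAINING_DAYS)

print(f"Planned interruptions: {planned}")
print(f"GPU-related interruptions: about {gpu_related}")
print(f"GPU-to-CPU failure ratio: about {gpu_related / CPU_FAILURES:.0f} to 1")
print(f"Mean time between interruptions: {hours_between_any:.1f} h")
print(f"Mean time between unexpected interruptions: {hours_between_unexpected:.1f} h")
```

Under these assumptions, a job of this size is interrupted roughly every three hours, which is why fast fault location and recovery matter so much for training efficiency.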

The stability of a GPU cluster directly affects R&D efficiency, time cost, and money. Next, I will walk you through how GMI Cloud achieves high stability for its GPU clusters.

First, the architecture of our cluster is developed entirely in-house. We start from the underlying GPU hardware, including high-speed GPU servers, storage, and networks. At the PaaS layer and above, we can build together with our partners. For large models, for example, customers can conduct R&D based on their own models. At the same time, we provide open-source large models for enterprises and individual developers, which can be deployed to the cloud cluster with one click, and we also offer optimization services.

GMI Cloud

This is our overall product. It helps enterprises automate the management and control of GPU clusters. Storage and network services can be scheduled as jobs, which lowers the threshold for enterprises to use GPU clusters.

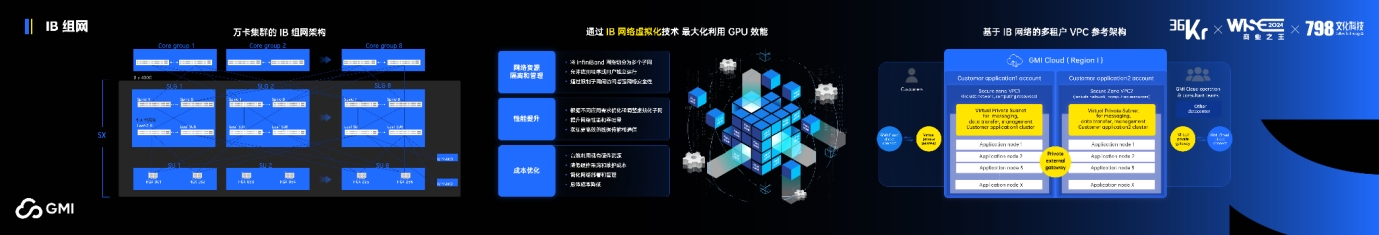

Here is our overall network setup. The picture on the far left is the IB (InfiniBand) 10,000-card cluster. We provide IB high-speed networks. Not all enterprises have experience operating and maintaining 10,000-card IB clusters, and our company is one of the few that does. We also provide VPC services. Different users in the GPU cluster can use different VPCs, so their resources are fully isolated and do not compete with each other.

In the storage layer, we provide different storage media for different business scenarios. For example, in the data backup scenario, you do not need such a high IOPS. If you are doing checkpoint storage for large model training or data reading for autonomous driving, you need a very high IOPS. Therefore, you can choose the most suitable storage type according to your business scenario requirements and economic model.

For a GPU cluster to reach a larger scale and higher stability, it needs a very powerful active monitoring platform. We have therefore developed our own monitoring system for cluster management. It provides end-to-end detection. On the platform, we can clearly see where a network interruption occurs and quickly locate the root cause, so that our partners can go on site and take action. We also support historical data queries, traceability, and alarm monitoring and handling.

Before delivery, to ensure the quality, stability, and reliability of the cluster, every GMI Cloud cluster goes through two processes. The first is the NVIDIA NCP verification system. Because we are an NVIDIA partner, the design plan must first be confirmed by NVIDIA. We then implement it and run the corresponding tests, including performance tests and stress tests, to ensure the availability of the cluster. The second is our own acceptance process. Before delivering to customers, our engineers test all hardware, software, storage, and networking, and run some basic open-source large models to ensure that training tasks run well on our GPUs. Together, the NVIDIA quality certification system and GMI's own delivery and acceptance system form a dual standard that ensures each delivered cluster is highly stable.

It is also worth mentioning that fault rehearsal is critical to locating, responding to, and solving problems quickly once they occur. We work on two fronts. First, GMI Cloud is a deep partner of IDC providers, and we have local partners in each country to carry out on-site implementation with the IDC. Second, we maintain 3-5% spare machines and spare parts with GPU ODM manufacturers, so that in case of hardware failure we can have on-site personnel replace the part as soon as possible. The GMI Cloud guarantee system can quickly detect and locate problems and quickly restore the cluster, so that the SLA we deliver to customers is very high. Currently, only a few GPU clusters worldwide can offer an SLA above 99%, and GMI Cloud's are among them.
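As a rough illustration of what these numbers imply, the sketch below converts an availability target into allowed downtime and a spare ratio into a machine count. The 30-day window and the 1,000-server cluster are hypothetical values chosen for illustration, not figures from the talk.

```python
import math


def allowed_downtime_hours(sla_percent: float, period_hours: float) -> float:
    """Maximum downtime within a period that still meets the availability target."""
    return period_hours * (100 - sla_percent) / 100


def spare_servers(cluster_servers: int, spare_percent: int) -> int:
    """Number of spare machines for a given spare ratio, rounded up."""
    return math.ceil(cluster_servers * spare_percent / 100)


HOURS_PER_MONTH = 30 * 24      # hypothetical 30-day measurement window
CLUSTER_SERVERS = 1000         # hypothetical cluster size

monthly_budget = allowed_downtime_hours(99.0, HOURS_PER_MONTH)
spare_range = (spare_servers(CLUSTER_SERVERS, 3), spare_servers(CLUSTER_SERVERS, 5))

print(f"99% SLA over 30 days allows about {monthly_budget:.1f} h of downtime")
print(f"3-5% spares for {CLUSTER_SERVERS} servers: {spare_range[0]} to {spare_range[1]} machines")
```

A 99% target leaves only a few hours of downtime per month, so keeping spares on hand and staff near the data center is what makes such a target reachable in practice.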

Having covered the stability problems and their solutions, let's talk about how to choose a cloud infrastructure partner from the perspective of AI Infra selection. When going overseas, everyone chooses according to the business, whether it is short-term or long-term, and according to the scenario. GMI Cloud therefore offers two options depending on the customer's needs. If you are a long-term tenant, we recommend a cluster dedicated exclusively to you for long-term use. If your need is short-term, you can use the GMI Cloud end-to-end solution, built up from the underlying customized cluster. We configure the GPU cluster according to the customer's needs. Whichever country you need the cluster in, we can go there to help you select and configure it.

In the software layer, GMI Cloud has its own Cluster Engine. While stability is as high as in the CPU era, the payment options are more flexible. You can rent one or two cards for one or two days, or you can commit to continuous use for 3 years. GMI Cloud also provides AI consulting services. 70% of our staff are R&D personnel, half of whom come from Google. They previously worked on deep learning and HPC and have a great deal of experience in AI algorithms and HPC high availability. GMI Cloud can share this experience with enterprise customers.

To close this speech, I will introduce two cases. In the first, a large Internet recruitment enterprise was building a private GPU cluster overseas, and GMI Cloud helped them build it end to end, from the underlying IDC up to the GPUs. The result was ready to use, like an apartment you can move into with just your luggage. They only need to focus on the business level, without paying attention to the underlying operation and management.

The second case is a well-known live streaming platform. As we all know, end-to-end large models are very popular at present. When a streamer and a viewer are connected live, the Chinese and English conversation between the two sides needs to be translated in real time, and this process does not need to go through ASR and then TTS. The enterprise directly uses an end-to-end large model running on GMI Cloud.

The above are two different cases and also two different product service methods of ours.

That concludes my talk today, which has covered the architecture design of GMI Cloud, the overall system, and the supply chain guarantees. Thank you! For more information, please follow the "GMI Cloud" official account.