A robot's most important component is its attributive: the string of modifiers in front of its name.

When you talk about robots, you can't just say "robots".

You have to say "the world's first robot capable of continuous somersaults", "the first robot with a large-span 8-DoF arm", "the first intelligent follow-along robot that needs no remote control"...

The titles keep getting fancier. At the "World Robot Conference" in Beijing's Yizhuang these past few days, robots have been taking turns showing off their skills to entertain the crowd.

I saw a chubby guy beside me eagerly taking pictures of a robot girl's "emotion-expressing head". Then his friend pulled him away and said, "There's a fight over there (at the robot battle arena)!"

Here, the smallest and cheapest robot dogs, costing only 398 yuan, are running all over the place;

The hardest-working operators unload materials non-stop on the production line;

So even electronic workhorses have to work overtime in Beijing?

The friendliest waiter was so busy greeting people that it scooped the ice cream crooked;

Hello, I'm really unhappy to serve you.

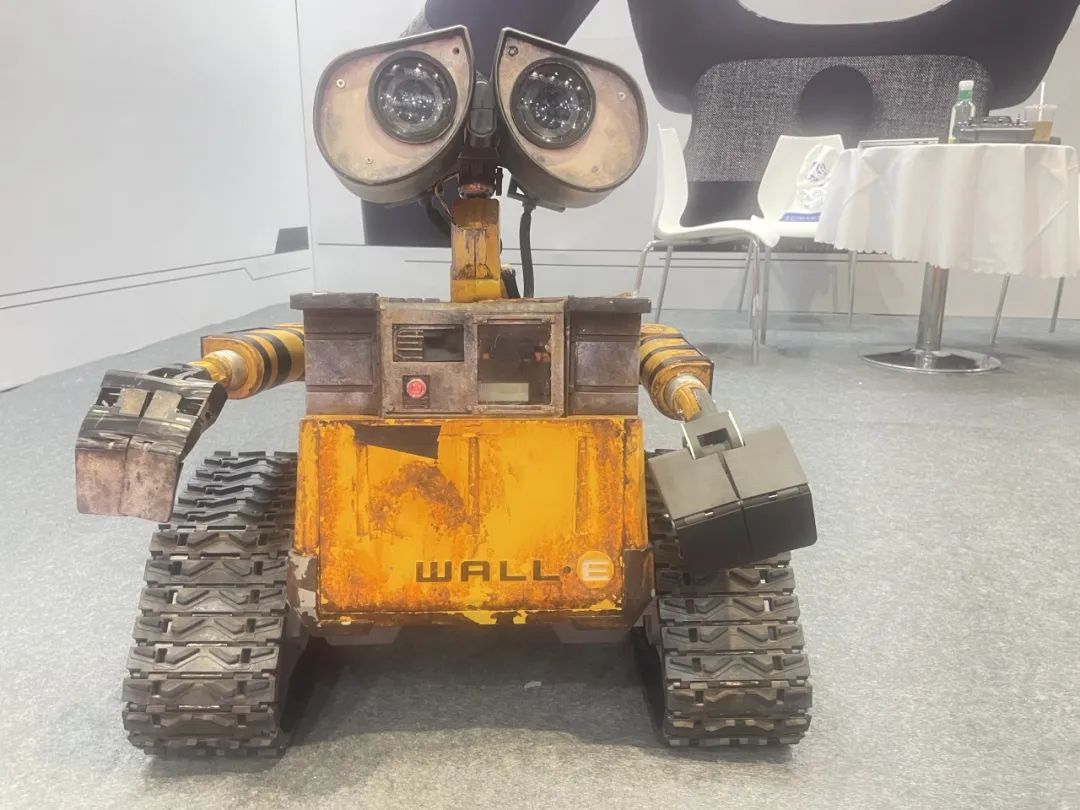

The cutest robot, a WALL-E look-alike, can't actually pick up garbage; it's just there for photos.

The audience laughed heartily and seemed reluctant to leave when it was time to go.

Really, without you robots, who else would be willing to amuse us like this!

Can robots have a sense of propriety?

Over the past two years, AI products for chatting, companionship, and psychological counseling have created an "illusion": people feel accompanied without being judged, understood and unconditionally supported, and even empathized with. Gradually, humans have come to depend on AI emotionally.

It's worth coming here, really.

Perhaps because of this, some people feel especially close to these AIs wrapped in steel bodies. They instinctively greet them from afar and chat with them as if they were old friends.

"What do you think of my outfit today?"

"You're wearing a light-blue short-sleeved shirt and a colorful lanyard (your exhibition pass) around your neck. It suits you very well!"

The robot that said this uses an RGB camera to capture images and a microphone array to pick up sound and locate its source. This is currently the most basic way for robots to perceive the outside world.

Some dexterous hands are equipped with force-controlled joints. When you reach out to touch them, they hold your hand back. You could say they're very polite.

Some robots have 31 tactile sensors on their bodies, so they'll know when a human hugs them.

Tactile sensors simulate human touch, and in robotics the related term is "electronic skin". Humanoid robot makers try to make electronic skin as lifelike as possible, and beyond pressure it can sense a growing range of information, including shape, texture, and even temperature.
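The following sketch shows how a body covered in pressure pads might turn raw readings into "someone is hugging me". It is a minimal illustration, not any exhibitor's method. The count of 31 pads comes from the text. The normalized readings, the 0.2 contact threshold, and the rule that a hug presses at least 8 pads at once are all hypothetical.

```python
from dataclasses import dataclass

NUM_PADS = 31            # number of tactile sensors, as in the text
CONTACT_THRESHOLD = 0.2  # hypothetical: normalized pressure (0 = no load, 1 = full scale)
HUG_MIN_PADS = 8         # hypothetical: a hug presses many pads at the same time


@dataclass
class TouchEvent:
    touched: list[int]  # indices of pads at or above the contact threshold
    peak: float         # strongest reading in this frame
    is_hug: bool


def detect_touch(readings):
    """Threshold one frame of pad readings and flag a hug when enough pads fire together."""
    if len(readings) != NUM_PADS:
        raise ValueError(f"expected {NUM_PADS} readings, got {len(readings)}")
    touched = [i for i, p in enumerate(readings) if p >= CONTACT_THRESHOLD]
    return TouchEvent(touched=touched, peak=max(readings), is_hug=len(touched) >= HUG_MIN_PADS)


if __name__ == "__main__":
    poke = [0.0] * NUM_PADS
    poke[5] = 0.6                                # a single finger tap
    hug = [0.5] * 12 + [0.0] * (NUM_PADS - 12)   # arms wrapped around the torso
    print(detect_touch(poke).is_hug, detect_touch(hug).is_hug)
```

A real system would also use where the pads sit on the body and how the readings change over time. Counting simultaneous contacts is only the simplest possible rule.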

Some manufacturers specialize in "electronic noses" for robots, which are essentially gas sensors: physical and chemical reactions between a sensitive material and gas molecules change the material's electrical properties. Reportedly, this at least lets a robot tell whether the liquid in front of it is water, baijiu (Chinese white liquor), or vinegar.
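The sketch below uses a nearest-centroid classifier to show how a change in electrical properties could be enough to tell liquids apart. It assumes a hypothetical array of three sensors whose resistance shifts when they are exposed to vapour. The reference "fingerprints" are invented numbers. Real electronic noses are calibrated on measured data and often use more elaborate pattern-recognition models.

```python
import math

# Hypothetical reference fingerprints: relative resistance change (R_gas - R_air) / R_air
# of each of three sensors when exposed to the vapour above each liquid.
# The numbers are invented for illustration; real ones come from calibration.
REFERENCE = {
    "water": [0.02, 0.01, 0.03],
    "baijiu": [0.45, 0.30, 0.05],   # strong response on the (assumed) ethanol-sensitive sensor
    "vinegar": [0.10, 0.05, 0.40],  # strong response on the (assumed) acid-sensitive sensor
}


def relative_change(r_gas, r_air):
    """Normalize each sensor's resistance against its own clean-air baseline."""
    return [(g - a) / a for g, a in zip(r_gas, r_air)]


def classify(sample):
    """Nearest centroid: return the liquid whose fingerprint is closest in Euclidean distance."""
    return min(REFERENCE, key=lambda name: math.dist(sample, REFERENCE[name]))


if __name__ == "__main__":
    baseline = [10.0, 10.0, 10.0]  # kilo-ohms in clean air (hypothetical)
    reading = [14.5, 13.0, 10.5]   # kilo-ohms near an unknown cup (hypothetical)
    print(classify(relative_change(reading, baseline)))
```

Normalizing against each sensor's clean-air baseline is what makes readings from different sensors comparable. Without this step, one sensor with a large base resistance would dominate the distance.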

Ultimately, robots need to combine visual, auditory (including intonation, tone, and semantic analysis), and physiological signals (body temperature, heart rate, sweat, even dopamine secretion) gathered from various sensors. They must analyze the user dynamically and distill the results into an emotional model, so they can respond in a human-like way.
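One common way to combine several signal sources is weighted late fusion. Each modality produces its own probability estimate over emotions, and the estimates are averaged using per-modality weights. The sketch below illustrates that technique only. The three emotion labels, the modality names, and the weights are hypothetical, and the text does not say which fusion method any exhibitor uses.

```python
EMOTIONS = ("happy", "neutral", "sad")                       # hypothetical label set
WEIGHTS = {"vision": 0.5, "audio": 0.3, "physiology": 0.2}  # hypothetical; would normally be learned


def fuse(estimates):
    """Weighted late fusion.

    `estimates` maps a modality name to {emotion: probability}. Missing modalities
    (e.g. no heart-rate sensor attached) are skipped and the remaining weights are
    renormalized, so the fused scores sum to 1 whenever each input does.
    """
    if not estimates:
        raise ValueError("need at least one modality")
    total = sum(WEIGHTS[m] for m in estimates)
    fused = {e: 0.0 for e in EMOTIONS}
    for modality, probs in estimates.items():
        w = WEIGHTS[modality] / total
        for e in EMOTIONS:
            fused[e] += w * probs.get(e, 0.0)
    return fused


def dominant(fused):
    """The emotion the robot would respond to."""
    return max(fused, key=fused.get)


if __name__ == "__main__":
    face = {"happy": 0.7, "neutral": 0.2, "sad": 0.1}
    voice = {"happy": 0.2, "neutral": 0.3, "sad": 0.5}
    print(dominant(fuse({"vision": face, "audio": voice})))
```

Late fusion keeps each modality's model independent, which makes it easy to drop a sensor. The trade-off is that it cannot learn cross-modal cues, such as a cheerful tone paired with a tense face.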

After all, who wouldn't want a robot with a sense of propriety?

"I don't know what kind of expression to make at this moment."

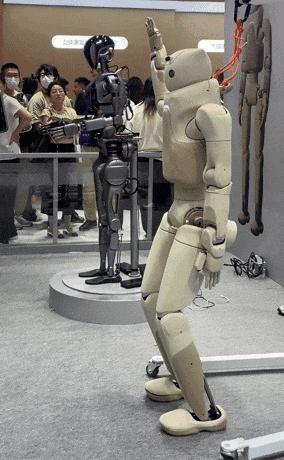

Dancing, moving goods, going up and down stairs... these "routine shows" no longer surprise anyone, because most of them rely on human remote operation and preset actions. Traditional motion control works out how a given end-effector should move by specifying the position, velocity, and acceleration relationships among the joints.
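For a rigid arm, those joint relationships are the textbook forward kinematics. The sketch below uses a hypothetical planar two-link arm whose link lengths and joint angles are assumptions, not taken from any exhibited robot. It shows how joint positions map to the end-effector position. It also shows how joint velocities map to end-effector velocity through the Jacobian.

```python
import math


def forward_kinematics(q1, q2, l1=1.0, l2=1.0):
    """End-effector (x, y) of a planar two-link arm; joint angles in radians."""
    x = l1 * math.cos(q1) + l2 * math.cos(q1 + q2)
    y = l1 * math.sin(q1) + l2 * math.sin(q1 + q2)
    return x, y


def end_effector_velocity(q1, q2, dq1, dq2, l1=1.0, l2=1.0):
    """Cartesian velocity v = J(q) * dq, with J the partial derivatives of (x, y)."""
    s1, c1 = math.sin(q1), math.cos(q1)
    s12, c12 = math.sin(q1 + q2), math.cos(q1 + q2)
    vx = (-l1 * s1 - l2 * s12) * dq1 + (-l2 * s12) * dq2
    vy = (l1 * c1 + l2 * c12) * dq1 + (l2 * c12) * dq2
    return vx, vy


if __name__ == "__main__":
    # Shoulder at 30 degrees, elbow bent 45 degrees, shoulder turning at 1 rad/s.
    q1, q2 = math.radians(30), math.radians(45)
    print(forward_kinematics(q1, q2))
    print(end_effector_velocity(q1, q2, 1.0, 0.0))
```

Differentiating once more gives the acceleration relationship, which is left out here for brevity. The closed-form approach works only because the links are rigid. That is exactly the property a soft face lacks.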

On site, besides joysticks (yes, just like the ones you used to drive remote-controlled cars as a kid), real people in motion-capture suits also serve as the "input source" for remote operation. Once a mapping has been established in advance, the motion-capture equipment records the person's joint trajectories and posture changes in real time and maps them onto the robot.
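The mapping relationship established in advance can be pictured as a calibration table from human joints to robot joints. In this minimal sketch, every joint name, scale factor, calibration offset, and joint limit is hypothetical. Real teleoperation systems often also solve inverse kinematics for the hands and filter sensor noise, and this sketch leaves both out.

```python
from dataclasses import dataclass


@dataclass
class JointMap:
    robot_joint: str
    scale: float   # ratio of robot to human range of motion
    offset: float  # calibration offset (radians) measured in a reference pose
    lower: float   # robot joint limit (radians)
    upper: float


# Hypothetical mapping table, built once during calibration.
MAPPING = {
    "human_left_elbow": JointMap("robot_left_elbow", 1.0, 0.0, 0.0, 2.5),
    "human_left_shoulder_pitch": JointMap("robot_left_shoulder_pitch", 0.8, -0.1, -1.5, 1.5),
}


def retarget(human_angles):
    """Map one motion-capture frame {human joint: angle in rad} to robot joint targets."""
    targets = {}
    for human_joint, angle in human_angles.items():
        m = MAPPING.get(human_joint)
        if m is None:
            continue  # the robot has no counterpart for this joint: ignore it
        raw = m.scale * angle + m.offset
        targets[m.robot_joint] = min(max(raw, m.lower), m.upper)  # respect joint limits
    return targets


if __name__ == "__main__":
    print(retarget({"human_left_elbow": 3.0, "human_left_shoulder_pitch": 1.0}))
```

Clamping to joint limits is the minimum safety step. Without it, a performer who bends further than the robot can would drive a joint into its hard stop.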

Most of the robot's actions are easy to understand. But there was one that, from a distance, looked like it was giving me the middle finger?

When I got closer, I realized it was dancing with its "crab-like pincers"!

I sent this confusing scene to GPT-5 and asked whether it understood. GPT-5 told me, "Judging from the background, the robot is probably showing the audience its ability to control flexible arm joints and perform precise hand operations." (Sure, you folks in the cyber world cover for each other, don't you.)

More delicate motion control can be seen in several "emotion-expressing heads".

A camera captures the face of the person in front of it, generates something like a point-cloud map, and sends it to the emotion-expressing head, which mimics the person's expression almost in real time.

The emotion-expressing head doesn't get stuck in overly exaggerated expressions. It switches smoothly between winks and forced smiles, and even achieves eye tracking and synchronization. That is a big improvement over its earlier versions.

Because of the deformable electronic skin and the many flexible passive joints (passive joints mainly add adaptability and, unlike active joints, do not need precise control), it is hard to write kinematic equations for the face, as one can for a rigid robot.

So how do robots learn to smile?

On the software side, Columbia University's Emo robot team has the robot watch large numbers of videos of human faces to learn the subtle facial changes that precede a given expression.

The robot then "looks at itself in the mirror" to observe which "muscles" need to move when it executes a given expression command. The model is trained on a dataset of facial landmarks paired with expression commands. This lets it mimic human expressions almost in sync.
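The "mirror" step is a form of self-modeling. The robot issues commands, watches what its own face does, and fits a model that maps desired facial landmarks back to commands. The sketch below shrinks this to one hypothetical motor driving one mouth-corner marker. A simulated camera stands in for the mirror, and an ordinary least-squares line stands in for the learned model. It is far simpler than what the Columbia team built and is meant only to show the data flow.

```python
import random


def mirror_observation(command, rng):
    """Simulated 'mirror' camera (hypothetical physics): the marker reads 2 mm at
    command 0 and moves 4 mm per unit of command, plus Gaussian sensor noise."""
    return 2.0 + 4.0 * command + rng.gauss(0.0, 0.05)


def babble(n, seed=0):
    """Motor babbling: send random commands and record (observed landmark, command) pairs."""
    rng = random.Random(seed)
    pairs = []
    for _ in range(n):
        command = rng.uniform(0.0, 1.0)
        pairs.append((mirror_observation(command, rng), command))
    return pairs


def fit_inverse_model(pairs):
    """Ordinary least squares for command = a * landmark + b (landmark -> command)."""
    n = len(pairs)
    mean_x = sum(x for x, _ in pairs) / n
    mean_y = sum(y for _, y in pairs) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in pairs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in pairs)
    a = sxy / sxx
    b = mean_y - a * mean_x

    def command_for(landmark):
        # Clamp to the motor's valid command range [0, 1].
        return min(max(a * landmark + b, 0.0), 1.0)

    return command_for


if __name__ == "__main__":
    model = fit_inverse_model(babble(500))
    # Ask for a smile that puts the mouth corner at 4 mm.
    print(round(model(4.0), 2))
```

The key design point is the direction of the model. It maps what the face should look like to what the motors should do. That is the question the robot has to answer when it copies a human expression.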

Robot lip-syncing, however, still lags behind, held back by flexible deformation and physical transmission delay.

The main difficulty in developing emotion-expressing heads still seems to lie in hardware, such as electronic skin with multimodal sensors and dexterous motors that act as artificial muscles.

When a human raises a phone, the robot makes a "V" sign. Humans convey emotions through body language and instinctively pick up on such "signals". Robots can't learn emotions, but they can imitate actions.

Currently, some robots can move their eyes independently. They can make eye contact with people and also pretend to be "thinking". Some robots also use gestures to express their intentions.
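Making eye contact comes down to turning a detected face position into eye angles. The sketch below assumes the face's 3D position is already known in a hypothetical head frame: x forward, y to the robot's left, z up, in metres. It also assumes each eye is a separate pan/tilt pair, with made-up positions and servo limits. Computing the angles for each eye separately, rather than once for the whole head, lets the two eyes converge on a nearby face.

```python
import math

# Hypothetical eye positions in the head frame (metres) and servo limits (degrees).
EYE_POSITIONS = {"left": (0.0, 0.03, 0.0), "right": (0.0, -0.03, 0.0)}
MAX_YAW_DEG = 35.0
MAX_PITCH_DEG = 25.0


def _clamp(value, limit):
    return max(-limit, min(limit, value))


def gaze_angles(target):
    """Per-eye (yaw, pitch) in degrees that point each eye at target = (x, y, z).

    Positive yaw turns toward +y (the robot's left); positive pitch looks up.
    Angles beyond the servo limits are clamped.
    """
    angles = {}
    for eye, (ex, ey, ez) in EYE_POSITIONS.items():
        dx, dy, dz = target[0] - ex, target[1] - ey, target[2] - ez
        yaw = math.degrees(math.atan2(dy, dx))
        pitch = math.degrees(math.atan2(dz, math.hypot(dx, dy)))
        angles[eye] = (_clamp(yaw, MAX_YAW_DEG), _clamp(pitch, MAX_PITCH_DEG))
    return angles


if __name__ == "__main__":
    # A face 60 cm straight ahead: both eyes turn slightly inward.
    print(gaze_angles((0.6, 0.0, 0.0)))
```

When the target sits at a servo limit, the head itself would typically have to turn. That coordination is outside this sketch.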

Micro-expression coordination, context adaptation, layered emotional expression... Humans often act unpredictably, so robots also need to move beyond "mechanical responses".

Robots, each in its own place

Robots are no longer obsessed with the human form. It is hard even to summarize the shapes the robots at this conference take.

As a recent joke goes, for a robot to look cool: if its upper body is ordinary, its lower body can't be; if its lower body is ordinary, its upper body can't be;

If the interior is ordinary, the exterior can't be.

It's unclear whether this is each company's stopgap for avoiding the "uncanny valley".

In the past, watching robots run, jump, dance, and twirl handkerchiefs, people couldn't help asking, "So what can they actually do?" This time, nobody has time to ask, because they're busy queuing up to play mahjong with the robots.

Robots really are evolving fast. Last year they could fold clothes; this year they can clean toilets. They had only just learned to peel cucumbers, and now they're even taking on the small job of charging phones. One can sing, and another will play mahjong with you.

Image source: CCTV News

Of course, service robots are still at the stage of finding real-world applications, and their arrival in households remains a future prospect.